Abstract

Many who have studied the issue agree that warfare is becoming more and more complex. Yet, while the complexity of war is certainly increasing, our perception of the problem is also coloured by our increasing consciousness of war’s complexity. Coping with this situation has proven difficult, as the long—and still-continuing—learning process witnessed in Iraq and Afghanistan demonstrates. The Australian Army has risen to this challenge with the release of Adaptive Campaigning and the launch of the Adaptive Army initiative. Providing the theoretical foundation of these approaches is ‘complex systems science’, a type of interdisciplinary research into complexity. While many in the Army are familiar with Adaptive Campaigning and the Adaptive Army, few are familiar with complex systems science. The purpose of this article is to provide an accessible introduction to complex systems science, and demonstrate how its principles are reflected in the Australian Army’s adaptive approach.

Indeed, the ability to adapt is probably most useful to any military organization and most characteristic of successful ones, for with it, it is possible to overcome both learning and predictive failures.

- Eliot Cohen and John Gooch, Military Misfortunes.1

You do not have to read too far in contemporary military theory to encounter the assertion that war is becoming more complex. There are clearly some objective features of the modern world that support this claim: it is more networked, information flows faster and further, and armies are larger than in times past. Yet complexity itself is not a new feature of warfare. What is new is, at least in part, our understanding of how to cope with it. We used to think that we had to rely on the mysterious and fickle genius of the heroic commander. With the industrial revolution, armies gradually built their planning and decision-making processes into a well-oiled machine that reduced reliance on individual genius. This machine had many moving parts and specialised functions that needed to be synchronised and deconflicted through detailed hierarchical control. We now know that this mechanistic approach is useful for solving complicated problems but does little to address complexity.

For the past twenty-five years, complexity has been the subject of intense scrutiny by scientists from many different backgrounds, resulting in the formation of a new field called complex systems science. In addition to providing a better understanding of complexity, this science is generating many new insights into how to cope with complexity, and even how to exploit it. The thread that ties all of these advances together is ‘adaptivity’. Every approach to addressing complexity shares this core idea: adaptation is the way to cope with complexity. With the Adaptive Army initiative and Adaptive Campaigning, the Australian Army has recognised the fundamental importance of an adaptive approach. However, the scientific foundation that supports these innovative concepts is not widely appreciated. The purpose of this article is to provide an accessible introduction to complex systems science, and demonstrate how its principles are reflected in the Australian Army’s adaptive approach.2

Introducing Complex Systems Science

The founding of the Santa Fe Institute (SFI) in 1984 to pursue problem-driven science marked the beginning of complex systems science. The purpose of the SFI is to tackle the really hard problems, ones that do not fit neatly within traditional scientific disciplines. This means that the problems complex systems science applies to are defined not by the particular composition of the system (the parts could be atoms, ants or armies) but by the nature of the relationships between the parts. Because of this, the language may seem a bit abstract. However, the abstract and general language of complex systems science has a crucial advantage over traditional scientific discourse. When faced with a messy real-world problem, the complex systems lexicon provides an interdisciplinary framework for making sense of the problem that draws on insights from across the sciences. Its abstractness works in our favour because it is better suited to considering the interplay between social and technical components, psychological and physical domains, and quantitative and qualitative approaches. While individual disciplines focus on understanding parts of the system in greater detail, for the purposes of directing military action, a comprehensive understanding of the whole system is much more important. The remarkable thing about complex systems science is that it is highly applied and practical even as it is fundamental.3

Over time, complex systems science has developed a framework of concepts that help us comprehend and explain the dynamics of complex systems. Some of these concepts are summarised in Table 1, below. There is no concise definition of complexity upon which all complex systems scientists agree. However, the essence of complexity is related to the amount of variety within the system, as well as how interdependent the different components are. Interdependence means that changes in the system generate many circular ripple effects, while variety means there are many possible alternative states of the system and its parts. Because interdependencies are the result of many interactions over time, complexity is fundamentally a dynamic characteristic of a system. In the table below, the concepts of emergence, self-organisation, autonomous agents, attractors and adaptation all contribute to a deeper understanding of complexity. All are capable of generating novelty: new variety or new patterns that increase the complexity of the system.
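A simple count shows how quickly variety escalates. As an illustration only (not a definition used by complex systems scientists), take a hypothetical system of $N$ parts, each of which can be in one of $k$ states. The number of possible joint states of the whole system is

$$
V = k^{N}, \qquad \text{for example } k = 2,\ N = 100 \;\Rightarrow\; V = 2^{100} \approx 1.27 \times 10^{30}.
$$

If the parts were independent, each could be examined on its own, covering only $k \times N = 200$ individual states. Interdependence is what forces attention onto the joint states, which is one reason complex systems resist part-by-part analysis.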

Complex systems science studies complex adaptive systems, which are all either living systems, or the products of living systems (such as the Internet). A complex adaptive system is open to flows of energy, matter and information, which flow through networks of both positive and negative feedback. Feedback is a fundamental concept because it marks the difference between linear and non-linear systems. Whereas outputs are always proportional to inputs in linear systems, non-linear systems magnify some inputs (positive feedback) and counteract others (negative feedback).4 Because feedback creates interdependence, it is a source of complexity. Feedback is also the underlying cause of emergence, self-organisation and attractors. For many centuries, most scientists approximated non-linear systems using linear methods, a very useful simplification, but one that only works up to a point.
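To make the linear/non-linear distinction concrete, here is a minimal Python sketch. The logistic growth model is a standard textbook example chosen for illustration, not one drawn from this article, and its parameters (`rate`, `limit`, `steps`) are arbitrary.

```python
def linear_system(x, gain=2.0):
    """Linear: the output is always proportional to the input."""
    return gain * x


def feedback_system(x0, rate=0.5, limit=100.0, steps=30):
    """Non-linear: discrete logistic growth with both kinds of feedback.

    rate * x          -> positive feedback (growth feeds further growth)
    (1 - x / limit)   -> negative feedback (growth is damped near the limit)
    """
    x = x0
    for _ in range(steps):
        x = x + rate * x * (1 - x / limit)
    return x


if __name__ == "__main__":
    # Doubling the input doubles the output of the linear system...
    print(linear_system(3.0), linear_system(6.0))
    # ...but not of the non-linear one: both runs end up near the same limit.
    print(feedback_system(1.0), feedback_system(2.0))
```

Early on, positive feedback dominates and the quantity grows in proportion to its current size; later, negative feedback pulls both trajectories towards the same limit, so the final response is no longer proportional to the starting input.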

Table 1: Key concepts of complex systems science

| Concept | Essence | Example |
| --- | --- | --- |
| Complexity | Variety and interdependence | Amazon rainforest |
| Emergence | Whole is different from the sum of its parts | Brain is conscious even though individual neurons are not |
| Self-organisation | Increasing order from the bottom up | Flocking birds form intricate patterns |
| Attractor | A point or set of points that attracts all nearby states of a dynamic system | Normal rhythmic beating of the human heart |
| Autonomous Agents | Self-interested agents make local decisions from local information | Peak hour traffic |
| Adaptation | Increasing fit to the environment | Evolution of specialised bird beaks to suit the available food sources |

By acting on local information, autonomous agents within a complex system naturally generate variety, because each agent has a slightly different context. To achieve their goals, agents cooperate and compete with one another, which results in interdependencies and multiple levels of organisation, where agents at one level cooperate to compete better at the next level up. As the agents continually co-adapt to one another, new niches for specialised agents arise, which means their environment is open-ended, and too vast and novel to allow the agents to find a permanently optimal strategy. Rather than optimise, the agents must continue to adapt to maintain their fitness, that is, their fit with their environment.

The US Marine Corps were the first warfighting organisation to realise that complex systems science could help to describe the complexity of war. In 1997, the Marine Corps’ primary manual, Warfighting, was updated to incorporate insights from complex systems science:

[W]ar is not governed by the actions or decisions of a single individual in any one place but emerges from the collective behavior of all the individual parts in the system interacting locally in response to local conditions and incomplete information. A military action is not the monolithic execution of a single decision by a single entity but necessarily involves near-countless independent but interrelated decisions and actions being taken simultaneously throughout the organization. Efforts to fully centralize military operations and to exert complete control by a single decisionmaker are inconsistent with the intrinsically complex and distributed nature of war.5

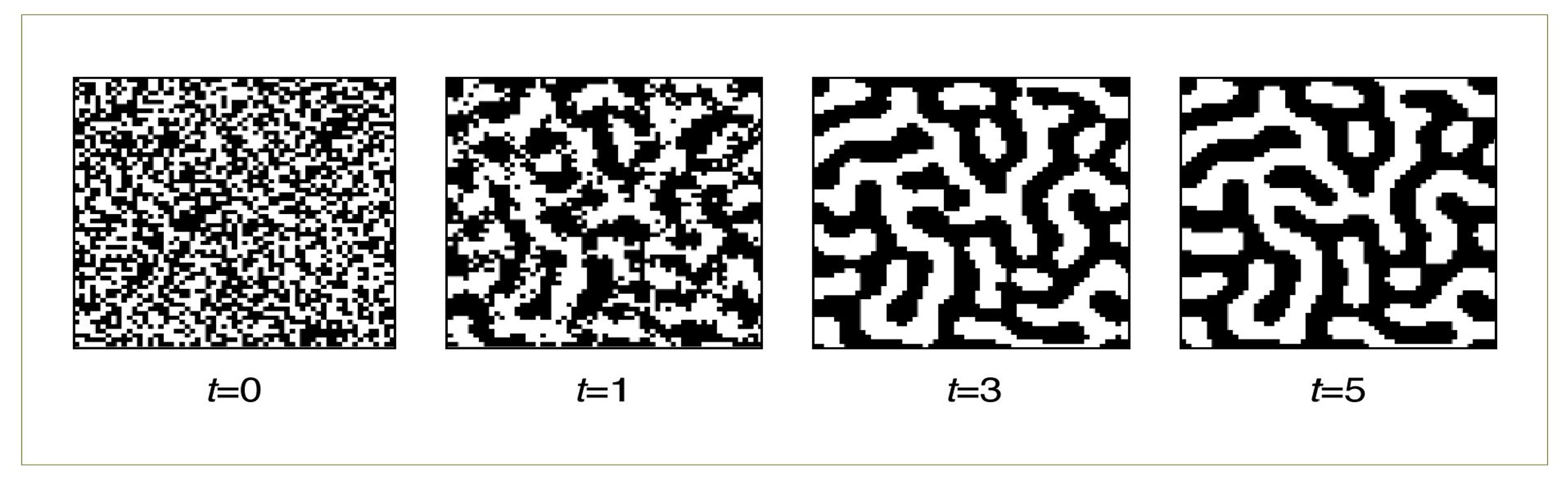

A simple example of how positive and negative feedback drive self-organisation and lead to the emergence of a global pattern is shown in Figure 1. Starting with a random mix of black and white ‘agents’, each agent chooses its colour according to two rules. Short-range activation means the agent wants to be the same colour as the majority of its neighbours (this is positive feedback). Long-range inhibition means the agent wants to be a different colour from agents that are further away (this provides negative feedback). As a result of local agents making local decisions according to these simple rules, a stable global pattern has emerged within five time steps. This model is more than just an interesting pattern: it has been used to explain phenomena as diverse as the growth and differentiation of the structure of an organism, pattern formation in animal fur, and the clustering of industries in regional economics.6
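The two rules can be sketched as a small cellular automaton. This is a minimal illustration, not the model used to produce Figure 1: the grid size, the neighbourhood radii (`r_near`, `r_far`) and the inhibition weight (`w_inhibit`) are hypothetical values chosen for the sketch. Black and white are coded as +1 and -1; each agent sums the colours of its near neighbours (activation), subtracts a weighted sum over a more distant ring (inhibition), and adopts the sign of the result.

```python
import random


def make_offsets(r_near, r_far):
    """Split neighbour offsets into a near disc (activation) and a far ring (inhibition)."""
    near, far = [], []
    reach = int(r_far)
    for dy in range(-reach, reach + 1):
        for dx in range(-reach, reach + 1):
            if dx == 0 and dy == 0:
                continue
            dist = (dx * dx + dy * dy) ** 0.5
            if dist <= r_near:
                near.append((dx, dy))
            elif dist <= r_far:
                far.append((dx, dy))
    return near, far


def step(grid, near, far, w_inhibit):
    """One synchronous update; colours are +1 (black) and -1 (white) on a wrapped grid."""
    n = len(grid)
    new = [row[:] for row in grid]
    for y in range(n):
        for x in range(n):
            # Positive feedback: pull towards the colour of nearby agents.
            act = sum(grid[(y + dy) % n][(x + dx) % n] for dx, dy in near)
            # Negative feedback: push away from the colour of more distant agents.
            inh = sum(grid[(y + dy) % n][(x + dx) % n] for dx, dy in far)
            field = act - w_inhibit * inh
            if field > 0:
                new[y][x] = 1
            elif field < 0:
                new[y][x] = -1
            # A field of exactly zero leaves the agent's colour unchanged.
    return new


def simulate(n=30, steps=5, r_near=2.0, r_far=5.0, w_inhibit=0.3, seed=0):
    """Start from a random mix and return the grid after every step."""
    rng = random.Random(seed)
    grid = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(n)]
    near, far = make_offsets(r_near, r_far)
    history = [grid]
    for _ in range(steps):
        grid = step(grid, near, far, w_inhibit)
        history.append(grid)
    return history


def neighbour_agreement(grid):
    """Fraction of horizontally or vertically adjacent pairs sharing a colour."""
    n = len(grid)
    same = 0
    for y in range(n):
        for x in range(n):
            same += grid[y][x] == grid[y][(x + 1) % n]
            same += grid[y][x] == grid[(y + 1) % n][x]
    return same / (2 * n * n)


if __name__ == "__main__":
    history = simulate()
    for t, grid in enumerate(history):
        print(t, round(neighbour_agreement(grid), 2))
```

Printing the agreement at each step is a crude way to watch order emerge: a random mix starts near 50 per cent, and the local rules should push it higher as patches of like-coloured agents form. No agent sees the global pattern; it arises entirely from local decisions.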

Figure 1. Pattern formation as an example of self-organisation and emergence

Australia’s Defence Science and Technology Organisation (DSTO) is leading international research in complex systems science, including through The Technical Cooperation Panel (TTCP) action group on Complex Adaptive Systems, chaired by DSTO Research Leader Anne-Marie Grisogono. Defence applications to date include: support to reconstruction operations in Afghanistan; assessing the implications for command and control; application to operational concepts; organisational and force design and structure; new ways of modelling conflict; training for adaptability; and developing a systemic approach to counter improvised explosive devices.

These studies and international collaborations have led to a number of more general insights into the nature of complexity and its relevance to defence. The following section will summarise the lessons learned from our experience to date.

Insights From Complex Systems Science

This section describes seven insights from the latest research in complex systems science. Together, these insights demonstrate how complex systems science offers a theoretical foundation, a coherent framework, and a common language for explaining why some approaches to complex warfighting succeed and others fail.

1. Solving Complex Problems Is Fundamentally Different To Solving Complicated Problems

When a Bushmaster breaks down, this is a complicated problem. The best way to solve it is with a subject matter expert (a mechanic) who has detailed knowledge of the vehicle. The problem may not be immediately obvious, but a trained mechanic knows how to go about diagnosing and fixing it. There is a right way for the vehicle to operate, and it is easy to assess whether the vehicle is working or not. The best trick we know for solving complicated problems is decomposition of the whole into parts. By checking different subsystems, the mechanic can isolate the cause of the problem and can take the system apart and reassemble it; moreover, the parts are fixed and interchangeable spare parts exist. Parts are interrelated, but those relationships are effectively static over time. This means the solution does not depend on whether the mechanic is available today, tomorrow, or in two weeks’ time.
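Decomposition works for the complicated case because the parts can be checked one at a time. One classic way to exploit this is half-split troubleshooting: test at the midpoint of a chain of subsystems, then discard the half that checks out. This sketch assumes a hypothetical drivetrain modelled as a simple series chain with exactly one faulty part, and a hypothetical `healthy_after(i)` check; it is not a real Bushmaster diagnostic procedure.

```python
def isolate_fault(subsystems, healthy_after):
    """Half-split fault isolation over an ordered chain of subsystems.

    healthy_after(i) returns True if everything up to and including
    subsystems[i] checks out. Assumes exactly one faulty part.
    """
    lo, hi = 0, len(subsystems) - 1
    checks = 0
    while lo < hi:
        mid = (lo + hi) // 2
        checks += 1
        if healthy_after(mid):
            lo = mid + 1  # fault lies downstream of the midpoint
        else:
            hi = mid      # fault is at or before the midpoint
    return subsystems[lo], checks


# Hypothetical drivetrain chain, not a real Bushmaster fault tree.
CHAIN = ["battery", "starter", "fuel pump", "injectors",
         "engine", "transmission", "transfer case", "final drive"]

if __name__ == "__main__":
    faulty = CHAIN.index("injectors")
    part, checks = isolate_fault(CHAIN, lambda i: i < faulty)
    print(part, checks)  # injectors 3
```

Eight subsystems need only three checks, and the answer is the same whenever the checks are run. The marketplace version of the problem that follows offers no such ordered, static chain to split.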

When a Bushmaster breaks down outside a busy marketplace in Tarin Kowt, Afghanistan, this is a complex problem. The appropriate course of action is sensitive to both time and context. Questions about the time of day, recent history of incidents, proximity of backup, area permissiveness, potential threats, and presence of media or video recording equipment must be quickly evaluated and appropriately weighted. The second-order effects of the risks to personnel and civilians from a hostile actor exploiting the situation must be considered. Whether the crew commander decides to let the crew attempt the repair, wait for support or abandon the vehicle, different risks will be incurred with different ramifications for the mission.

It is not appropriate to rely on a subject matter expert to resolve this problem, because deep but narrow expertise does not on its own help to solve an issue that demands a holistic assessment of the context to derive an appropriate response. Nor will decomposing the problem into its components work, because this ignores the trade-offs and relationships between parts. Complex problems cannot be solved using techniques that are successful for complicated problems. While some people cope naturally with complexity, many first need to open themselves up to a new mindset, aspects of which are captured within each of the insights below.

2. Warfare Contains Fundamental And Irreducible Uncertainty And Unpredictability

This is hardly a novel insight. Carl von Clausewitz famously wrote:

War is the realm of chance. No other human activity gives it greater scope: no other has such incessant and varied dealings with this intruder. Chance makes everything more uncertain and interferes with the whole course of events.7

He discussed how more information can actually make us more uncertain, and characterised intelligence reports in war as often contradictory or false and mostly uncertain. For Clausewitz, the three sources of unpredictability and uncertainty in war are interaction, friction and chance. Interaction distinguishes war from mechanical arts directed at inanimate matter, because in war ‘the will is directed at an animate object that reacts’. Friction is roughly those factors that differentiate between real war and war on paper. Chance is the tendency within the remarkable trinity (violence, chance, rationality) characterising war that is of most concern to the commander and his army.

Yet in recent years, some military theorists have used ‘Information Age’ science and technology to claim that the sources of uncertainty are not fundamental but stem from limits in the available sensors and communications technology. The most prominent advocate of this position is David Alberts, the US Director of Research for the Office of the Assistant Secretary of Defense for Networks and Information Integration. In their book Network Centric Warfare, Alberts, Garstka and Stein write:

While the Information Age will not eliminate the fog and friction of war, it will surely significantly reduce it, or at the very least change the nature of the uncertainties. We need to rethink the concepts and practices that were born out of a different reality.8

The new reality of Network-Centric Warfare (NCW) seeks to achieve ‘information superiority’:

As in the commercial sector, information has the dimensions of relevance, accuracy, and timeliness. And as in the commercial sector, the upper limit in the information domain is reached as information relevance, accuracy, and timeliness approach 100 percent. Of course, as in the commercial sector, we may never be able to approach these limits.9

For Alberts et al, ‘In essence, NCW translates information superiority into combat power by effectively linking knowledgeable entities in the battlespace.’ Whereas Clausewitz saw fundamental differences between war and other professions, Alberts et al argue that ‘the basic dynamics of the value-creation process are domain independent’. While Clausewitz pointed out that more information often increases uncertainty, and that much of it will be false or contradictory, for Alberts et al more information is always better, and 100 per cent relevant, accurate and timely information is a useful goal.

The insights from complex systems science overwhelmingly support Clausewitz’s view of war, not the NCW thesis. The first person to recognise the link between the non-linear sciences and Clausewitz was Alan Beyerchen. Beyerchen suggested that the notorious difficulties of interpreting On War were at least in part due to the predominance of a linear approach to analysis, when Clausewitz ‘perceived and articulated the nature of war as an energy-consuming phenomenon involving competing and interactive factors, attention to which reveals a messy mix of order and unpredictability’.10 Beyerchen traced deep connections between Clausewitz’s discussions of interaction, friction and chance on the one hand, and key concepts from non-linear science, including positive feedback, instability, entropy and chaos, on the other. Beyerchen’s two major conclusions were that an understanding of complex systems (or at least a non-linear intuition) may be a prerequisite for fully understanding Clausewitz, and that the non-linear sciences may help to establish fundamental limits to predictability in war.

All of the concepts described above that contribute to complexity (emergence, self-organisation, autonomous agents, attractors and adaptation) are present in war. All of these sources of complexity generate novelty and surprise. An important implication is that war is fundamentally and irreducibly uncertain and unpredictable. This means that efforts to predict and control in warfare will often only mask the true complexity of the situation, rather than actually reducing or eliminating it. The danger of oversimplifying a complex situation is that actions have unintended consequences that undermine the best of intentions and efforts. In spite of the understandable urge to impose order on chaos, an understanding of complex systems suggests that we would be better served by focusing on exploiting the transformative potential of sources of uncertainty and surprise, and by viewing irreducible uncertainty as an opportunity to disorient the adversary rather than as a risk to mitigate.

3. Complex Problems Cross Multiple Scales

Organisations divide up responsibility for solving problems among their members. Most organisations, including the military, employ a hierarchy to separate problems at different scales. Lower echelons of the military hierarchy tend to have a shorter time scale, a faster battle rhythm, and a smaller area of interest. Higher echelons tend to focus on longer time scales, change more slowly, and cover a much larger spatial scale, but with reduced resolution. This is a highly effective structure for solving problems that arise at different scales. However, complex problems could be defined as those problems that cannot be solved at a single scale. They require coordination, multiple perspectives, and a systemic response, because cross-scale effects interlink problems at different scales. Putting someone in charge does not in itself solve a complex problem, because it does not help address cross-scale effects. We need fundamentally new strategies for solving multi-scale problems.11

We now know that warfare and terrorism are multi-scale phenomena, because conflict casualties in both cases are described by a power law.12 A power law means that there is no characteristic scale for the system. A power law is a flag for complex behaviour: it indicates that there is positive feedback in the system, and it means that events that seem improbably large are actually likely to occur. Power laws are often described as distributions where the rich get richer (wealth distribution was one of the first data sets in which a power law was noticed). In warfare, this translates to ‘clumpy’ casualty events: when it rains, it pours. Although the mean number killed and wounded by suicide terrorist attacks is 41.11 people, the World Trade Center attack in September 2001, which killed 2749 people, is described by the same power law distribution.13 Most suicide terrorist attacks actually have far fewer than forty-one casualties. Unfortunately, knowing the average does not tell us much about systems governed by a power law. Power laws provide one powerful mathematical explanation for Clausewitz’s perceptive observation that more information can lead to greater uncertainty: when the tail of the distribution is heavy enough, the average does not settle down as more data are collected, because each new extreme event can shift it dramatically. If height followed a power law instead of the normal distribution (or bell curve), with a global population of six billion people we would expect the tallest person to be over eighty kilometres tall! The multi-scale nature of warfare has a profound effect on how we assess risk, how we gather and interpret information, and how we resolve complex issues.
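The gap between the average and the typical event can be seen by sampling. This sketch draws a bell-curve sample (hypothetical heights in centimetres) and a power-law sample using the standard library's `random.paretovariate`; the tail exponent `alpha = 1.5` is a hypothetical value chosen for illustration, not one fitted to the casualty data cited above.

```python
import random
import statistics


def summarise(sample):
    """Summary statistics that behave very differently under a power law."""
    mean = statistics.fmean(sample)
    median = statistics.median(sample)
    return {
        "mean": mean,
        "median": median,
        "max_over_median": max(sample) / median,
        "share_below_mean": sum(x < mean for x in sample) / len(sample),
    }


def compare(n=10_000, alpha=1.5, seed=0):
    """Draw a bell-curve sample and a power-law (Pareto) sample of the same size."""
    rng = random.Random(seed)
    # Bell curve: hypothetical heights in cm (mean 170, standard deviation 10).
    normal = [rng.gauss(170, 10) for _ in range(n)]
    # Power law: Pareto tail with minimum value 1 and hypothetical exponent alpha.
    heavy = [rng.paretovariate(alpha) for _ in range(n)]
    return summarise(normal), summarise(heavy)


if __name__ == "__main__":
    normal, heavy = compare()
    for name, stats in (("normal", normal), ("power law", heavy)):
        print(name, {k: round(v, 2) for k, v in stats.items()})
```

In runs of this sketch, most power-law draws fall below their own average and the largest draw dwarfs the typical one, whereas the tallest ‘person’ in the bell-curve sample sits only modestly above the median.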

4. Sources Of Order In Complex Systems Come From The Bottom Up As Well As From The Top Down

Trying to change the culture of an organisation from the top down always generates resistance. While this can be frustrating, it is perfectly natural. Any organisation that is easy to change will not be around for long. Armies are enduring institutions precisely because they are stable and robust to change. Just like organisations, societies exhibit a certain stability, even in dysfunctional and conflict-ridden states. Old patterns of behaviour tend to perpetuate and stubbornly resist improvement. The stability can be understood as a consistent set of interlocking positive and negative feedback loops. Unless these feedback loops are altered, external interventions to improve the situation are likely to be only temporary.

A metaphor is useful for understanding the change in mindset required to transform a system that contains self-organising dynamics.14 A billiard ball on a pool table is (approximately) a linear system. The ball travels in a straight line for a distance proportional to the amount of force applied by the pool cue. Now suppose that the pool table is not flat, but contains many small hills and valleys. If the ball is resting on the top of a hill, it is in an unstable state. The smallest force will move the ball a long way as it runs down the hill and into a valley (this is positive feedback). As the ball rolls around the valley, it is attracted to the lowest point in the valley, where it eventually comes to rest. Now, if the ball is not struck with sufficient energy, it will simply roll back to the attractor (this is negative feedback). The region of all points that roll back to the attractor is known as the basin of attraction. The amount of energy required to escape the basin of attraction depends on the depth of the valley.
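The rugged pool table can be sketched in one dimension. This is a minimal illustration, assuming a landscape `V(x) = x**4/4 - x**2/2 - tilt*x` (a standard double-well shape chosen for the sketch) and a heavily damped ball that simply rolls downhill. A strike of the cue is modelled crudely as an instantaneous displacement rather than an energy input, and the hypothetical `tilt` parameter stands in for reshaping the table.

```python
def slope(x, tilt=0.0):
    """Derivative of the hypothetical landscape V(x) = x**4/4 - x**2/2 - tilt*x.

    With tilt = 0 there are two valleys (attractors) at x = -1 and x = +1,
    separated by a hill at x = 0.
    """
    return x**3 - x - tilt


def settle(x, tilt=0.0, dt=0.05, steps=2000):
    """Let the ball roll downhill (heavily damped) until it comes to rest."""
    for _ in range(steps):
        x -= dt * slope(x, tilt)
    return x


if __name__ == "__main__":
    # Small strike from the right-hand valley: the ball rolls back to it.
    print(round(settle(1.0 + 0.5), 3))
    # Strike large enough to clear the hill: the ball ends in the left-hand valley.
    print(round(settle(1.0 - 1.5), 3))
    # Tilted table: the left-hand valley no longer exists, so the ball leaves it unaided.
    print(round(settle(-1.0, tilt=0.5), 3))
```

Without tilt, negative feedback undoes a small strike, while a strike that clears the hill at x = 0 lets positive feedback carry the ball into the other basin of attraction. For this landscape, any tilt above roughly 0.385 removes the left-hand valley entirely, which mirrors the idea of lowering a ridgeline rather than hitting the ball harder.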

In this metaphor, direct control through the formal mechanisms means moving the ball by striking it with the cue. Not understanding the self-organising mechanisms in a system is like playing pool on a rugged pool table and expecting it to be completely smooth. A better understanding of the landscape can help to plot a better path that uses the gradients to advantage. In reality, feedback loops are not usually fixed, but can be changed over time. For the pool table, this means we may have some ability to mould the landscape or tilt the pool table. By lowering a ridgeline, we may be able to decrease dramatically the amount of energy needed to escape the basin of attraction. Once the ball is where we would like it, we can then make sure it stays there by creating a valley and deepening the well at that point. This is what is meant by altering the feedback loops within a dynamic system. In combination, the use of top down and bottom up methods for changing the system’s attractor may be far more effective than either approach alone.

Winning wars often requires changing societies as well as changing oneself. Both require an understanding of the bottom-up, self-organising sources of order and stability in addition to the top-down, formal mechanisms for imposing order. Just as in the billiard ball metaphor, such an understanding helps to identify areas dominated by positive and negative feedback. Areas of positive feedback are highly sensitive to change, which means they are levers for transforming the system. A small injection of energy yields a disproportionately large return on investment within an area of positive feedback. From a systems perspective, these levers are the ‘key terrain’. Areas of negative feedback will resist even large injections of energy. Continuing to push uphill against a deeply entrenched negative feedback loop is not consistent with the military principle of economy of effort. Exploiting an understanding of self-organisation can greatly augment attempts to change the formal structures that are built on top of informal bottom-up processes. Actually changing the feedback loops can lead to even greater influence over the system.

Of course, societies and organisations are much more complex than billiard balls. It is more like we are trying to herd a million billiard balls towards an unknown attractor, over a shifting landscape covered in fog, against deadly opposition, where the billiard balls are constantly moving and interacting—and worse—they have minds of their own. But in this case, the use of a pool cue seems even more futile, and shaping the environment becomes more pivotal to the desired transformation.

The awakening movements in Iraq, which began in Anbar Province in 2005, are a good example of how self-organisation can be exploited during conflict. In 2007, well-known US security analyst Anthony Cordesman wrote of Iraq:

If success comes, it will not be because the new strategy President Bush announced in January succeeded, or through the development of Iraqi security forces at the planned rate. It will come because of the new, spontaneous rise of local forces willing to attack and resist Al Qa’ida, and because new levels of political conciliation and economic stability occur at a pace dictated more by Iraqi political dynamics than the result of US pressure.15

By funding the salaries of ad hoc coalitions based on organic, informal power structures among tribal sheikhs, the United States was able to amplify a natural source of resistance to al-Qaeda within the system. Although the rise in power of armed militias may present longer-term challenges to state-building, this set of problems is still preferable to the problems facing Iraq prior to the Sunni uprising against al-Qaeda in 2005.

5. Adaptation Is The Best Way To Cope With Complexity

If the challenges of complexity start to seem overwhelming, it is important to remember the endless source of inspiration we have for how to thrive in the face of complexity: life. In Jurassic Park, the fictional mathematician Ian Malcolm, a chaos and complexity theorist, remarked that ‘the history of evolution is that life escapes all barriers. Life breaks free. Life expands to new territories. Painfully, perhaps even dangerously. But life finds a way.’16 Malcolm is describing the unprecedented capacity of life for adaptation. Many valuable lessons on adaptation can be found within the life sciences.

The adaptive immune system is a vast distributed network of autonomous agents defending the organism against a wide variety of continually evolving pathogens. It must first perform the function the military calls ‘identification friend or foe’ to distinguish between self and non-self molecules. Some pathogens, like the parasites that cause malaria, actively evade detection by following Mao’s dictum that the guerrilla must move amongst the people as a fish swims in the sea (immunologists call this ‘intracellular pathogenesis’). Others use deception to misdirect the immune response. Pathogens engage in black market racketeering (technically they use a ‘type III secretion system’) by building hollow tubes through which they inject their own proteins into host cells, where those proteins shut down defences. The immune system is able to recognise novelty, develop innovative counters, upgrade and update the agents, mass effects and intensify concentrations of the best agents to cope with the identified threat, and maintain a long-term memory that triggers a strong response if the same pathogen is encountered again. Perhaps even more impressively, it does all of this without any central guidance or control.

Turning to evolution, organisations can learn from organisms how to better target innovation while preserving essential functions, in order to better anticipate and prepare for future challenges and opportunities. Scientists used to assume that Darwinian evolution was a ‘blind’ process driven by random mutation. Recently, molecular biologists have found evidence that variation is far from random, and have hypothesised that genomes actually anticipate challenges and opportunities in their environment. The evolution of ‘evolvability’ describes how genomes develop ‘strategies’ that accelerate the rate of evolution by carefully targeting variation. For example, there are mechanisms that focus variation to create ‘hot spots’ of genetic change, and useful segments of DNA can be identified and reused to create ‘interchangeable parts’.17 The genome appears capable of modulating evolution to better preserve critical functions intact while focusing variation in those regions most often exposed to environmental flux. Understanding how to evolve the mechanisms of evolution has profound implications for the military. By applying adaptation to the adaptation process itself, second order adaptation would enable the military to get better at adapting over time.

In between the rapid adaptation of the immune system and the slow evolution of species, biology is full of systems that learn at both the individual and collective level. One study of learning of particular relevance to defence is Dörner’s experiments in the psychology of complex decision-making.18 Dörner’s method consists of immersing participants in a complex microworld simulation, where all of the variables have interdependencies and are connected by feedback loops. For example, in one microworld the participants were installed as the mayor of a fictional town called Greenvale. Dörner found that even with dictatorial powers and the best of intentions, most—but not all—participants failed miserably. This prompted Dörner to attempt to distinguish between good and poor actor behaviour, identify the common cognitive traps that complex problems present, and develop a theory of how emotions impair rational decision-making under stress. Recent experimentation in Australia between DSTO, the Army and the University of South Australia, and international collaboration with the School of Advanced Military Studies and the Institute for Defense Analyses in the United States, are examining whether it is possible to improve adaptability through mentored game playing using Dörner’s microworlds. The hypothesis is that a combination of theory, practice and reflection can help to improve learning within a complex situation, thereby enhancing individual adaptability.

In spite of the vast differences between these examples, a simple model captures the basic form of all adaptive mechanisms: VSR, or variation and selective retention.19 Without an internal or external source of variation, there is no possibility of change, so variation is an essential prerequisite for adaptation. Selective retention inhibits some variants (negative feedback) and reinforces others (positive feedback) with a bias towards retaining fitter variants. In simple terms, adaptation is nothing more than a principled and sustained application of trial and the elimination of error.
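A minimal sketch of the VSR loop, assuming a toy ‘environment’ represented as a hypothetical target bit string and ‘fitness’ as the number of traits that match it. Variation is random bit flipping; selective retention keeps a variant only if it fits at least as well as the current one. Real adaptive systems are far richer, but the loop has the same shape.

```python
import random


def fitness(candidate, environment):
    """Fit to the environment: number of traits that match it."""
    return sum(c == e for c, e in zip(candidate, environment))


def vsr(environment, generations=2000, seed=0):
    """Variation and selective retention on a bit string."""
    rng = random.Random(seed)
    n = len(environment)
    current = [rng.randint(0, 1) for _ in range(n)]
    history = [fitness(current, environment)]
    for _ in range(generations):
        # Variation: each trait flips with probability 1/n.
        variant = [1 - bit if rng.random() < 1 / n else bit for bit in current]
        # Selective retention: worse variants are discarded (negative feedback),
        # variants that fit at least as well are kept and built upon (positive feedback).
        if fitness(variant, environment) >= fitness(current, environment):
            current = variant
        history.append(fitness(current, environment))
    return current, history


if __name__ == "__main__":
    environment = [1, 0] * 16  # hypothetical 32-trait environment
    best, history = vsr(environment)
    print(history[0], history[-1])
```

No single step optimises anything; fit accumulates through repeated trial and the elimination of error. Changing `environment` part-way through would simply give the same loop a new target to keep adapting towards.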

6. Adaptation Requires Continual Refinement Of System-Level Trade-Offs

If adaptation is the best way to cope with complexity, then how do we get more of it? Unfortunately, adaptation is not something you can just buy off the shelf. Adaptability is not contained in any single component, and it cannot be separated from the other functions of the system. The sources of variation, selection and retention must permeate the organisation and be connected in the right ways. Adaptation is a sort of ‘ghost in the machine’, or in the terminology of complex systems science, adaptability is an emergent property. If we want to improve adaptability, we need to take a systemic approach. This means understanding the system as a whole, in the context of its environment and its purpose, and deliberately considering trade-offs in the way the system is organised.

Trade-offs exist because there is no one right way to organise a system. The best way to organise depends on the context, which is in constant flux. Complex systems science has identified a number of trade-offs, most of which are not new. There are, however, two main differences in how trade-offs are treated from a complex systems perspective.

Firstly, a complex systems approach insists on applying trade-offs to the system as a whole. This is because a system that is adaptive at one level may be brittle and unresponsive at the next level up. For example, a high rate of individual learning is no guarantee that any organisational learning is taking place. If force adaptivity is the real requirement, then trade-offs must be considered at the force level and not constrained to components of the force. While this guideline sets the scale for assessing the relevant system qualities, it by no means limits interventions to the same scale. As our third insight emphasised, the multi-scale nature of complex systems means that in order to achieve adaptivity at the scale of the whole system, interdependencies across all scales of the system must be considered.

Secondly, complex systems science takes a multidimensional approach to managing trade-offs. Consider the trade-off between cooperation and competition. This is often treated as a zero-sum game: according to conventional wisdom, if you want to make an industry more competitive, you have to limit cooperation. The trade-off then consists of selecting a point along the spectrum from pure competition to pure cooperation. However, this spectrum is a simplification. In reality, there are many different ways to combine competition and cooperation. These combinations differ across multiple dimensions, and the trade-off is not a matter of pitting one against the other but of navigating within this multidimensional space. The trade space contains possibilities where competition and cooperation are synergistic and mutually reinforcing. Yaneer Bar-Yam exemplifies this complex systems perspective when he explains competition and cooperation in a team sport like basketball at multiple levels.20 Players compete for places in the basketball team. The team then cooperates to compete against the opponent. Teams compete, but they also cooperate as a league to compete for viewers against other sports. Even though sports compete for viewers, they cooperate on issues like anti-doping to compete with other forms of entertainment. In this example, provided competition and cooperation are carefully separated in time, cooperation at one level improves the quality of competition at the next level up.

A list of complex systems design trade-offs is provided in Table 2. Each of these trade-offs takes place in a multidimensional trade space, and they are not independent of one another. It is beyond the scope of this paper to provide a detailed discussion of these trade-offs. They are included to make the point that in spite of the simplicity of the VSR model of adaptation, there are many subtle details that influence how adaptive a system is in practice. This is good news, because it suggests many ways of improving the adaptability of the force.

The question that Table 2 raises is how we know where to position ourselves in trade space for all of these interdependent factors, given the irreducible uncertainty and unpredictability of war. One answer, drawn from the discussion of the fifth insight above, is to apply adaptation to the process of becoming more adaptive.

Table 2. Trade-offs in complex systems design

| Trade-off (pole A) | Trade-off (pole B) | Description |
|---|---|---|
| Adapted | Adaptability | Adapted to current context or adaptable to future contexts |
| Exploration | Exploitation | Explore alternatives or exploit the current best strategy |
| Competition | Cooperation | Agents compete to achieve individual goals or cooperate to achieve a shared goal |
| Independence | Interdependence | Agents separated to maintain independence or connected to create interdependence |
| Innovation | Integration | Organisational orientation towards innovation and creativity or integration and control |
| Bottom up | Top down | Decision-making and change initiated from the bottom up or from the top of the hierarchy down |
| Decentralised | Centralised | Control is independently implemented in parallel or centrally coordinated |
| Specialisation | Multitasking | Agents are heterogeneous and highly specialised or homogeneous and able to perform multiple functions |
| Induction | Deduction | Agents act on rules generalised from past experience or by deducing logical consequences of assumptions |
| Deterministic | Random | The system’s behaviour is completely determined by the input or uniformly random regardless of the input |
| Chaos | Order | System is unstable and changes quickly or system is stable, ordered and robust to perturbation |
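The exploration and exploitation row of Table 2 can be illustrated with the standard epsilon-greedy strategy for a multi-armed bandit. This is a textbook technique swapped in for illustration, not a method proposed in this paper, and the payoffs below are hypothetical. With probability `epsilon` the agent explores an option at random; otherwise it exploits the option that currently looks best. Pure exploration (`epsilon = 1.0`) never capitalises on what it learns, while pure exploitation (`epsilon = 0.0`) can lock onto whichever option happened to look good first.

```python
import random


def epsilon_greedy(true_means, epsilon, steps=5000, seed=0):
    """Play a Gaussian multi-armed bandit with an epsilon-greedy policy.

    Returns (average reward per step, number of pulls of each arm).
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try any option at random
        else:
            arm = max(range(n_arms), key=estimates.__getitem__)  # exploit
        reward = rng.gauss(true_means[arm], 1.0)  # noisy payoff
        counts[arm] += 1
        # Update the running mean of rewards observed for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total += reward
    return total / steps, counts


if __name__ == "__main__":
    means = [0.1, 0.5, 0.9]  # hypothetical payoffs, unknown to the agent
    for eps in (0.0, 0.1, 1.0):
        avg, counts = epsilon_greedy(means, eps)
        print(f"epsilon={eps}: average reward {avg:.2f}, pulls {counts}")
```

A fixed `epsilon` is itself a fixed point in trade space; in the spirit of the text, one could adapt it over time rather than choose it once.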

This means that to be adaptive, we must be able to continually refine these system-level trade-offs. However, this prescription rolls off the tongue far more easily than it is implemented in practice. Resourcing is an obvious hurdle, but probably more serious is the difficulty of implementing desired changes in force-level design when moving in trade space has implications that cut across programs and services at multiple scales. Such changes affect decisions owned by many individuals who cannot simply be compelled to change their internal policies. The difficulty of this challenge, however, in no way reduces the importance of force-level adaptivity as an objective.

7. It Is Easier To Design Environments That Foster Adaptation Than To Directly Impose It

The paradox that confounded effects-based operations was that complexity increases the incidence of second- and third-order effects (because of interdependence) while simultaneously decreasing our ability to predict those effects (because of novelty-generating mechanisms). The way to resolve this apparent paradox is to realise that prediction is neither particularly useful nor necessary for effective intervention in a complex adaptive system.

Once again, a metaphor will help illustrate the change in mindset required to harness a complex adaptive system. Consider the difference between throwing a bird and throwing a rock.21 A rock follows the laws of physics, which allows prediction of the trajectory of the rock based on knowledge of the angle and velocity of the throw (the initial conditions). In contrast, knowing the initial conditions when a bird is thrown does not help to predict the trajectory or final destination of the bird. To restore predictability, we could tie the wings of the bird so that it behaves more like a rock, but this seeks to control complexity by eliminating it. Instead, if we provide an attractor for the bird, such as a feeder, and some boundaries, such as a fence, then the bird may end up where we want it, even though we cannot predict how it will get there. Better yet, by training the bird to associate its goals with the falconer’s goals, falconry harnesses the bird’s capacity to learn, so that it performs dazzling feats, catches prey and returns to hand.22
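The contrast in the bird-rock metaphor can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a model of real bird flight: the rock uses the textbook projectile range on flat ground with no air resistance, while the "bird" is a hypothetical random walk with a weak pull (`pull`) towards a feeder and a square fence that clips its position. Repeating the rock's throw gives the same answer every time; repeating the bird's throw from the same start gives a different path and end point each time, yet the end points cluster around the feeder.

```python
import math
import random

G = 9.81  # gravitational acceleration in m/s^2


def rock_landing_distance(speed, angle_deg):
    """Rock: the landing point follows from the initial conditions alone
    (flat ground, no air resistance)."""
    return speed ** 2 * math.sin(2 * math.radians(angle_deg)) / G


def bird_final_position(start, feeder=(50.0, 50.0), fence=(0.0, 100.0),
                        pull=0.1, steps=500, seed=None):
    """Toy 'bird': each step mixes a random move with a weak pull towards
    the feeder (attractor), and the fence (boundary) clips the position."""
    rng = random.Random(seed)
    lo, hi = fence
    x, y = start
    for _ in range(steps):
        x += pull * (feeder[0] - x) + rng.gauss(0.0, 1.0)
        y += pull * (feeder[1] - y) + rng.gauss(0.0, 1.0)
        x = min(max(x, lo), hi)  # the fence keeps the bird inside
        y = min(max(y, lo), hi)
    return x, y


if __name__ == "__main__":
    print(rock_landing_distance(10.0, 45.0))  # same throw, same answer
    for seed in range(3):
        # Same start every time, yet each flight ends somewhere different
        print(bird_final_position((5.0, 5.0), seed=seed))
```

The feeder and fence shape where the bird ends up without specifying, or predicting, the route it takes.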

Predictability is important for the control of complicated systems. However, complex adaptive systems contain goal-directed autonomous agents, which are already capable of controlling themselves. In our discussion of the fourth insight above, we raised the possibility of changing the landscape of the environment using informal mechanisms. Changing the environment of a complex adaptive system modifies the distribution of incentives, which encourages different patterns of behaviour; this is an indirect way of influencing the system. If the goals of the agents are understood, incentive modification may be a far more effective way of transforming patterns of behaviour than attempting prediction-based control. This requires a shift in mindset away from trying to impose order on chaos and towards harnessing complexity.

We are so deeply immersed in a torrent of incentive modifications that sometimes we barely notice them. Every time we turn on the television, drive to work, or engage in social interaction we are subject to incentive modifications. Free-to-air television creates a compelling incentive for us to watch advertisements, while associating the advertised product with enjoyment. City streets would be highly lethal if not for road rules combined with the threat of their enforcement, which together provide disincentives for socially irresponsible behaviour. One only has to compare the behaviour of a small child with that of an adult in an exclusive restaurant to notice the force of social pressures the child is oblivious to but that almost any adult has learned to observe. Every social situation has norms associated with it, and actions that violate those norms (tantrums, nudity, honesty regarding uncomfortable truths) are often met with a severe response.23 In a society that respects individual freedom and autonomy, incentive modification is a ubiquitous alternative to direct control, encompassing both formal (the legal system) and informal (social norms) mechanisms for influence.

In military affairs, the use of incentive modification is especially important in what is now called stability operations. Following his experience in the Philippines, Lieutenant Colonel Robert Bullard wrote of the importance of a judicious mixture of force and persuasion:

This makes clearer the complex nature of pacification as a compromise between force and persuasion, rights and ideals, rude dictation and policy, and this complexity is what makes pacification difficult.24

Force alone will not bring stability, but neither will persuasion alone. The art of stability operations lies in producing the right combination of force and persuasion.

This discussion provides one approach to resolving the difficulties that were raised within the sixth insight on system-level trade-offs. Rather than seeing the independent decision-makers within the system as an impediment to achieving force-level adaptivity, we could view them as the crucial engine for adaptation.

To move in design trade space, we would shape their behaviour indirectly, by designing their environment and incentive structure so that their adaptation to the local context is coupled to overall force adaptivity.

In summary, complicated problems are problems where the ‘devil is in the details’, and the details are best managed by decomposing the problem into smaller pieces. Complex problems arise from variety and interdependence, span multiple scales and generate novelty. They resist templated solutions, and trying to break them up ignores interdependencies, generating unintended consequences. Solving complex problems requires an adaptive approach across multiple scales that actively shapes the environment, manages system-level trade-offs and exploits self-organisation and emergence.

The Connection Between Adaptive Campaigning And Complex Systems Science

This discussion refers to the Adaptive Army initiative and the 2006 version of Adaptive Campaigning, which at the time of writing is the current endorsed future land operating concept. The ‘Adaption Cycle’ is probably the most recognisable and most discussed feature of Adaptive Campaigning. The Adaption Cycle views conflict as a complex adaptive system and describes a cycle of interaction intended partly to change the system and partly to learn. The Adaption Cycle consists of four steps: Act, Sense, Decide, Adapt. The first step is action, because adaptation is proactive rather than reactive, and assumes that action will always occur in the face of uncertainty and the emergence of novelty. Action stimulates the system, which generates a response, such as forcing the adversary to unmask from below the discrimination threshold. The response provides information about the system, which is the basis for decisions. The final step, adapt, emphasises that every action is a learning opportunity; consequently, the Land Force may need to adapt. The adapt step explicitly considers learning how to learn, which we described above as second-order adaptation.
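As a loose, hedged illustration only, the loop below maps the four steps of the Adaption Cycle onto a simple optimisation technique: a (1+1) evolution strategy with a one-fifth success rule for adjusting step size (a known heuristic from evolutionary computation, not something Adaptive Campaigning prescribes). The environment `response` and its hidden optimum `BEST_ACTION` are hypothetical. Act probes the system, Sense observes the result, Decide keeps or discards the probe, and Adapt changes *how* the actor probes: if more than a fifth of recent probes succeeded, the step grows; otherwise it shrinks. That last step is a toy analogue of learning how to learn.

```python
import random

BEST_ACTION = 7.0  # hypothetical; unknown to the actor


def response(action):
    """Hypothetical environment: outcomes improve as action nears BEST_ACTION."""
    return -(action - BEST_ACTION) ** 2


def adaption_cycle(cycles=300, seed=0):
    """Illustrative Act-Sense-Decide-Adapt loop with step-size adaptation."""
    rng = random.Random(seed)
    action, step = 0.0, 1.0
    current = response(action)
    window = []  # recent successes, used for second-order adaptation
    for _ in range(cycles):
        trial = action + rng.gauss(0.0, step)  # Act: probe the system
        observed = response(trial)             # Sense: observe its response
        success = observed > current           # Decide: keep or discard probe
        if success:
            action, current = trial, observed
        window.append(success)
        if len(window) == 10:                  # Adapt: tune how we probe
            rate = sum(window) / len(window)
            step = step * 1.5 if rate > 0.2 else step / 1.5
            window.clear()
    return action, step


if __name__ == "__main__":
    final_action, final_step = adaption_cycle()
    print(final_action, final_step)
```

The window of 10 cycles and the factor of 1.5 are arbitrary illustrative settings.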

There have been misconceptions that the Adaption Cycle advocates acting before any surveillance or planning, and is an inferior and unnecessary variant of John Boyd’s famous Observe Orient Decide Act (OODA) loop.25 The first point is addressed by Lieutenant Colonel Chris Smith’s article in this issue. The second misconception confuses the tactical focus of the OODA loop, born out of the experience of one-on-one duels of fighter pilots, with the more strategic ability to adapt. Whereas the OODA loop underpins a faster decision cycle, the Adaption Cycle promotes a faster learning cycle. As William Owen notes,

Just like learning to drive, speeding around the OODA loop will get you killed as surely as speeding on the road. What is more, the OODA loop urges you to speed in the fog of war and on the icy road of chance!26

As simple models that emphasise the central importance of cyclical interaction and the temporal dimension of war, both the OODA loop and the Adaption Cycle (ASDA) have some explanatory power. However, it could be argued that the very success of both models in capturing public attention has inhibited discussion of the more important contributions of Boyd and of those contained within Adaptive Campaigning.

Adaptive Campaigning is situated within a whole-of-government context, which promotes a systemic solution that integrates the elements of national power. It recognises the central role of influencing and controlling people and perceptions. Adaptive Campaigning introduces five interdependent and mutually reinforcing conceptual lines of operation (LOOs), recognising the need for concurrency in responding to complexity. The indigenous capacity LOO attacks the feedback loops within the system to ensure success is not transient, and promotes the formation of a stable self-regulating system.

Adaptive Campaigning explicitly incorporates a number of concepts from the science of complex systems, explaining them in practical terms. An example is the taxonomy of adaptivity: operational flexibility, operational agility, operational resilience and operational responsiveness.27 Adaptive Campaigning also recognises the trade-off between large scale effects and fine scale complexity described within complex systems science.28 It articulates a symbiotic relationship between mission command and adaptive action. Mission command provides scope for subordinate commanders to apply judgement, while adaptive action exploits this freedom to better adapt to the local context.

Perhaps the biggest strength of Adaptive Campaigning is the recognition of the logical implications of an adaptive approach. The need for a new approach to the planning and design of operations is identified. Adaptive Campaigning also calls for a culture of adaptation. It describes an aspirational Army culture where education is valued, training environments are complex and ambiguous, a premium is placed on lessons learned, challenging understanding and perceptions is encouraged, and mistakes are acknowledged so they can be learned from.

Conclusion

The publication of Complex Warfighting in 2004 marked the recognition of the complexity of the operational environment by the Australian Army. In 2006, Adaptive Campaigning moved beyond admiring the problem to advance adaptation as the way to cope with complexity. In 2008, the Army released the Adaptive Army initiative, which extended the application of adaptation from operations to the way the force is structured. All of these documents are based on a deep understanding of complex systems science, integrated with an appreciation of national security policy, contemporary operational experience and military history.

This paper has presented seven insights from the forefront of complex systems science. Together, these insights open up a new paradigm that is significantly different to our traditional problem solving approach. The Joint Military Appreciation Process (JMAP) provides a systematic, analytical and highly structured process for rational decision making. The JMAP is extremely well suited to solving complicated problems. Complex problems cannot be approached in the same way as complicated problems. Complex problems require holistic multi-scale understanding, iterative adaptation, leveraging informal mechanisms, exploiting emergence, and shaping the environment. This is not as hard as it sounds, for many commanders and staff already do this to some degree without explaining it in these terms. However, we will not see the full power of this approach unless it is documented, trained and made widely available to the force.

Looking ahead, there is much work to be done to capitalise on the promising first steps made under the Adaptive Army initiative. The Australian Army needs to articulate the new approach to planning and designing operations called for in Adaptive Campaigning. It needs to review existing doctrine and define the conditions under which linear mechanical processes are good enough and, more importantly, those under which they are not. If both linear and non-linear techniques have a place, which they should, then boundaries and transitions between approaches need to be specified to avoid confusion. Finally, the Australian Army needs to institutionalise an adaptive approach across all of the fundamental inputs to capability if it is serious about developing a culture of adaptation.

Endnotes

1 Eliot Cohen and John Gooch, Military Misfortunes: The Anatomy of Failure in War, Free Press, New York, 1991, p. 94.

2 For a more detailed discussion of the application of complex systems to military problems see Alex J Ryan, ‘Military applications of complex systems’, in Dov M Gabbay, Cliff Hooker, Paul Thagard, John Collier and John Woods (eds), Philosophy of Complex Systems, Elsevier, Amsterdam, 2010.

3 Yaneer Bar-Yam, Making Things Work: Solving Complex Problems in a Complex World, NECSI Knowledge Press, Boston, 2004, p. 18.

4 Technically, mathematicians define a system f as linear if and only if it is additive, f(a+b)=f(a)+f(b), and homogeneous, f(ka)=kf(a) for any scalar k. Many systems with feedback violate these conditions.

5 Headquarters US Marine Corps, Marine Corps Doctrinal Publication 1, Warfighting, US Government Printing Office, Washington DC, 1997.

6 Alan M Turing, ‘The Chemical Basis of Morphogenesis’, Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, Vol. 237, No. 641, 1952, pp. 37-72; BN Nagorcka and JR Mooney, ‘From stripes to spots: prepatterns which can be produced in the skin by a reaction-diffusion system’, IMA Journal of Mathematics Applied in Medicine and Biology, Vol. 9, No. 4, pp. 249-67; Paul Krugman, ‘A Dynamic Spatial Model’, National Bureau of Economic Research, Working Paper No. 4219, Cambridge, 1992.

7 Carl von Clausewitz, On War, Michael Howard and Peter Paret (ed. and trans.), Princeton University Press, Princeton, 1976, p. 101.

8 David S Alberts, John Garstka and Frederick P Stein, Network Centric Warfare: Developing and Leveraging Information Superiority, National Defense University Press, Washington DC, 1999, p. 72.

9 Ibid., pp. 54-55.

10 Alan D Beyerchen, ‘Clausewitz, Nonlinearity and the Unpredictability of War’, International Security, Vol. 17, No. 3, 1992.

11 Yaneer Bar-Yam, Making Things Work, p. 14.

12 Lewis F Richardson, Statistics of Deadly Quarrels, Boxwood Press, Pittsburgh, 1960; Aaron Clauset, Maxwell Young and Kristian S Gleditsch, ‘On the Frequency of Severe Terrorist Events’, Journal of Conflict Resolution, Vol. 51, No. 1, 2007, pp. 58-88.

13 Ibid.

14 A related metaphor is used to explain resistance to international development interventions in Yaneer Bar-Yam, Making Things Work, p. 211.

15 Anthony H Cordesman and Elizabeth Detwiler, Iraq’s Insurgency and Civil Violence Developments through Late August 2007, Center for Strategic and International Studies, Washington DC, 2007, p. 2.

16 Michael Crichton, Jurassic Park: A Novel, Knopf, New York, 1990, p. 159.

17 Lynn H Caporale, Darwin in the Genome: Molecular Strategies in Biological Evolution, McGraw-Hill, New York, 2003, p. 4.

18 Dietrich Dörner, The Logic of Failure: Why Things Go Wrong and What We Can Do to Make Them Right, Metropolitan Books, New York, 1996.

19 Donald T Campbell, ‘Blind variation and selective retention in creative thought as in other knowledge processes’, Psychological Review, Vol. 67, 1960, pp. 380-400.

20 Yaneer Bar-Yam, Making Things Work.

21 The bird-rock metaphor was created by Paul Plsek, inspired by Richard Dawkins’ description of the physics of flight in The Blind Watchmaker, and is explained in Tom Bentley and James Wilsdon, The Adaptive State: Strategies for Personalising the Public Realm, Demos, London, 2003.

22 John Hartley, ‘From Creative Industries to Creative Economy: Flying Like a Well-Thrown Bird?’ [in Chinese] in John Hartley (ed.), Creative Industries, Tsinghua University Press, Hong Kong, 2007, pp. 5-18.

23 One of the eleven candidate definitions of culture given in Kluckhohn’s classic introduction to anthropology is culture as a mechanism for the normative regulation of behaviour. Clyde Kluckhohn, Mirror for Man, McGraw-Hill, New York, 1949.

24 Robert L Bullard, ‘Military Pacification’, Journal of The Military Service Institution of the United States, XLVI (CLXIII), 1910, p. 5.

25 Charles Dockery, ‘Adaptive Campaigning: One Marine’s Perspective’, Australian Army Journal, Vol. III, No. 3, 2008, pp. 107-18.

26 William Owen, ‘Are We That Stupid?’, Proceedings of the Land Warfare Conference, Adelaide, 2007.

27 A-M Grisogono, ‘Co-Adaptation’, in SPIE Symposium on Microelectronics, MEMS and Nanotechnology, Brisbane, 2005.

28 Alex J Ryan, ‘About the bears and the bees: Adaptive approaches to asymmetric warfare’, Interjournal, 2006.