Overcoming Challenges for Strategic Advantage

Contemporary advancements in artificial intelligence (AI) have brought to the forefront of global discussion the prospect of integrating adaptive AI technologies more extensively into battlefield operations. This discussion frequently takes the position that AI and autonomous technologies may revolutionise warfare and deliver asymmetric advantage to nations that use them effectively.[1] The frequently proposed benefits of military AI and autonomy are numerous, including decision advantage, safer and more efficient use of personnel, the ability to generate mass and scalable effects, and enhanced force projection.[2] However, the greatest advantage of AI and autonomy for states and their militaries may be to unlock capabilities that continually adapt to evolving operational and tactical problems, using innovation to seize the initiative and disrupt adversaries with transient technological advantages.[3]

There are unique complexities in the land domain that militate against the introduction of novel technological warfighting systems. This is because, unlike in the maritime, air, space and cyberspace domains, non-AI-enabled software struggles to scale to the practically unbounded number of terrain-based and human variables in the land domain. AI and AI-enabled autonomous systems have particular utility in land operations because they can ‘learn’ how to navigate uncertainty, a characteristic that adversaries will struggle to overcome.[4] AI technology therefore offers significant potential to nations that can capitalise on technological progress to deploy effective land-domain AI-enabled systems at scale. The possibility that these capabilities could contribute to Australia’s strategic priorities prompts the question: how can the Australian Army effectively operationalise AI in the land domain to maximise the strategic advantage it will provide in a future conflict?

This article explains the unique benefits and challenges facing the Australian Defence Force (ADF) in its efforts to develop and use operational AI in the land domain. It argues that these unique factors are significant enough to warrant domain-specific governance considerations that remain consistent with the broader Australian defence landscape. For the ADF to gain and maintain asymmetric advantage in the land domain using AI and AI-enabled autonomy, the technology needs to be reliable.[5] Armies, including the Australian Army (Army), risk operating at reduced effectiveness if the governance processes that shape the development and use of land domain AI are ill-suited to the operational context. After all, a commander will not use an AI-enabled system unless confident that its use will support achievement of the mission.

This article defines AI as ‘a collection of techniques and technologies that demonstrate behaviour and automate functions that are typically associated with, or exceed the capacity of, human intelligence’.[6] AI technologies, and their integration with human command and control, will be the primary driver of robotics and autonomous systems (RAS), enabling systems to reliably perform tasks or functions without (or with limited) direct human control, while continually adapting in highly dynamic environments.[7]

This article seeks to inform future efforts to apply and use AI technologies in the land domain, as well as related policy and governance frameworks. While significant progress has already been made in the research, development and demonstration of AI technologies, attention must now shift to the challenge of integrating AI within command and control architectures and developing governance mechanisms to enable its use in land domain operations.

[1] Michael C Horowitz, ‘Artificial Intelligence, International Competition, and the Balance of Power’, Texas National Security Review 1, no. 3 (2018): 38.

[2] Australian Army, Robotics and Autonomous Systems Strategy v2.0 (Canberra: Army HQ, 2022), pp. 7–18.

[3] Ash Rossiter and Peter Layton, Warfare in the Robotics Age (Boulder: Lynne Rienner Publishers, 2024), pp. 146–149, 162–163.

[4] Ibid., p. 33.

[5] Austin Wyatt, Joanne Nicholson, Marigold Black and Andrew Dowse, Understanding How to Scale and Accelerate the Adoption of Robotic and Autonomous Systems into Deployable Capability, Australian Army Occasional Paper No. 20 (Canberra: Australian Army Research Centre, 2024).

[6] Australian Army, Robotics and Autonomous Systems Strategy, p. 4.

[7] Ibid., p. 5.

Much of the existing literature on AI across industries and academic fields has focused on its emergent properties and underlying technological advancements. There is typically less focus on the effective integration of AI and other emerging technologies into practical application.[8] This is despite the importance of such integration to military success. In particular, recent conflicts in Syria, Ukraine, Libya and Nagorno-Karabakh have demonstrated that the ability to integrate novel technologies (such as unmanned systems) within unique operational contexts is critical to the generation of asymmetric advantage.[9]

This article expands on previous Army research on military use of AI. Notably, Dr Austin Wyatt et al. identified six distinct contextual barriers to mass and scalable adoption of these warfighting technologies across various nation states, including cultural aversion and resource limitations.[10] While past research has identified these barriers, it has involved comparatively little consideration of the specific challenges that the land domain poses to the development and use of operational AI systems. This omission is particularly evident in the lack of available policy, governance, strategic and technical considerations to inform operational decision-makers such as commanders. This article analyses the potential for AI to contribute to Army’s future operations in the land domain and offers some recommendations and opportunities to bridge the gap in existing governance frameworks.

This article synthesises information and explores both the potential advantages and consequences of Army’s adoption of AI in the land domain. The research method involved a comprehensive review of unclassified open-source literature, focused on AI technology and its particular relevance to Army operations in the land domain. The review drew on a variety of qualitative and quantitative research and involved a rigorous process of searching, review and analysis. Among other material, it examined defence policy, governance, strategy and regulation relevant to the use of AI. The research also benefitted from many conversations with policy, operational and technical subject matter experts in Defence and academia, which helped to contextualise it for an Australian, ADF and Army audience. Research for this article was completed in late 2024.

Findings from this research are consolidated into the Considerations for AI Governance within Land Operations, included after the main body of the article. The Considerations offer suggestions to Army personnel at varying levels of command that, if applied, may improve decisions concerning the application and use of land domain AI. Specifically, they elicit potential assumptions about the operational application and use of AI and, in doing so, provide one potential means for commanders to develop an understanding of a system and to make decisions about its use. These considerations are the author’s suggestions and are not official policy or guidance on this matter.

This article, and the included Considerations for AI Governance, is intended to provide a springboard for further consideration of contextual factors constraining the effective development and deployment of land domain AI systems. In this regard, the findings presented here are not intended to be exhaustive. Future research will need to focus on the interaction between the land domain, AI development and deployment processes, and Defence’s test and evaluation processes. It will also need to consider factors relating to data governance and management, accountability mechanisms, international and national law and policy, as well as the priorities and policies of AUKUS partners.

This article provides the foundation for the further research that is needed to generate a clear picture of how best to establish and govern land domain AI in order to deploy effective, trustworthy, reliable and legally compliant land AI systems.

[8] Horowitz, ‘Artificial Intelligence, International Competition, and the Balance of Power’, p. 38.

[9] Heiko Borchert, Torben Schütz and Joseph Verbovszky, Beware the Hype: What Military Conflicts in Ukraine, Syria, Libya, and Nagorno-Karabakh (Don’t) Tell Us about the Future of War (Hamburg: Defence AI Observatory, 2021), p. 62.

[10] Wyatt et al., Understanding How to Scale and Accelerate the Adoption of Robotic and Autonomous Systems.

Developing and implementing an effective land domain AI governance framework will require significant upfront investment. However, it is critical to enhancing the land power of those militaries capable of strategically appropriate and responsible development and exploitation of this rapidly advancing technology. The integration of AI into Australia’s land forces would deliver several advantages if executed effectively.

If realised, AI-enabled land systems have the potential to increase Army’s efficiency and effectiveness without requiring a commensurate expansion in personnel numbers.[11] Small and inexpensive AI-enabled capabilities can generate mass and scalable effects[12] when numerous systems operate as a swarm in pursuit of a shared objective. Importantly, one human operator can oversee the function of many autonomous systems, thereby reducing the number of Army personnel who must be deployed in potentially dangerous operational conditions to achieve results at scale.[13] Integrating soldiers and remote autonomous systems in this way leverages the relative strengths of people and AI-enabled machines to bolster the effectiveness of both.[14] This approach is known as human-machine teaming (HMT).

The force multiplier benefit of HMT is particularly significant given Army’s modest size of under 45,000 personnel, compared with the much larger armies of many regional powers.[15] AI capabilities therefore have the potential to enable Army to contend with numerically larger threats.[16] One area in which AI shows particular potential is generating appropriate responses to repetitive, unchanging tasks that align closely with its training data.[17] For example, Army recently demonstrated leader-follower vehicle technologies that enable a single driver to lead a convoy of autonomous trucks.[18] Using this type of technology at scale could allow Army to redirect its workforce from simple, repetitive tasks to more complex roles. Such a redistribution of personnel may help address recruitment and retention challenges.[19]

By combining AI with advanced manufacturing in contested environments, Army could achieve more effective and more humane operational results through a strategy of ‘robotic first contact’. This method involves using consumable or single-use AI-enabled systems to make first contact with a potential adversary. Such an approach opens up courses of action that would be unavailable to a commander if the threat were posed to personnel. For example, a commander could send a disposable robotic system (which has no instinct for self-preservation) into situations of ambiguous hostility and accept the risk of its destruction by delaying kinetic action until a foe acts with hostility first. This ‘shoot-second’ approach to initial engagement is a uniquely potent capability in the land domain and may improve both military and humanitarian outcomes, particularly when operations are conducted in close proximity to civilian populations.[20] Equally, a commander could choose to intentionally delay contact with the enemy or slow the operational tempo until adversaries reveal themselves and their combatant status can be confirmed. These characteristics of AI-enabled systems may be particularly beneficial in close-quarter combat or during urban operations, where risks to civilians are greater.

Beyond increasing tactical options for military commanders, AI-enabled systems have the potential to change the battlefield landscape as a whole. They offer new ways for militaries to manage dangerous battlefields, even when the threat is not directly related to enemy engagement. For example, AI-enabled capabilities can create distance from an adversary to enable safer Army engagement with dangerous or potentially dangerous scenarios. They can also conduct explosive ordnance disposal or operations within radioactive environments far more safely than humans. As a corollary, the proliferation of AI-enabled land systems may foreshadow the expansion of battlefields by enabling operations in territory that human soldiers cannot access safely. Because the risk of losing robotic systems is more tolerable than losing people, the technology delivers greater strategic and operational flexibility in military decision-making.[21]

Because force protection considerations are so much less demanding for AI-enabled systems than for conventional capabilities, these systems open up novel design and functional possibilities. For example, an autonomous system does not have to carry and protect an internal crew. It could therefore be designed to be smaller and more lightly armoured, enabling easier transport and deployment across theatres at speed and scale, and reducing its signature. AI and autonomy also present the opportunity to enhance or augment existing platforms or roboticised capabilities. This could extend the life of a capability system, or create other unique advantages compared to non-AI-enabled platforms.[22]

AI-enabled systems are also likely to increase the tempo of land warfare. AI has the potential to enable the rapid collection, analysis and sharing of significant quantities of battlefield data. This reduces the cognitive burden and data overload faced by personnel, which can give a commander decision advantage over an opponent. The ability presented by AI to enable faster and more efficient data processing, exploitation and dissemination could significantly enhance military preparedness, operations, advanced targeting capabilities and more besides.[23] These factors, combined with changes to the battlefield landscape, the ability for one operator to command many AI-enabled systems, and options for automating simpler tasks, are all expected to increase the tempo of operations.

The land domain is already a complex, high-tempo operational environment. AI systems therefore present a potent means of capitalising on the pace of the battlefield, enhancing Army’s ability to seize the initiative, accelerating kill chains, and enabling more effective responses to (or pre-emption of) the adversary’s decision-making.[24] Those who leverage these technologies to accelerate the tempo of warfare in their favour (‘owning time’) are positioned to achieve advantage against adversaries without the technological capacity or preparedness to keep up.[25]

Land domain AI systems require an inherent level of flexibility and adaptability in their logic algorithms to effectively respond to the complexity and variability of the land domain. It is impractical to program software with a specific response to each of the practically infinite variations of input within the land domain. Therefore, traditional software approaches, where each input is explicitly coded for, do not offer this required flexibility.[26] However, developments in machine learning (ML) algorithms that recognise, analyse and generalise patterns and correlations from input data have realised the required level of adaptability and flexibility.[27] ML enables AI to respond effectively to a broader range of inputs by applying previously observed common features and patterns to new scenarios, rather than humans having to code responses to each possible input.[28]
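The contrast between explicitly coded responses and learned generalisation can be illustrated with a deliberately simplified, hypothetical Python sketch. The terrain features (slope and surface roughness), their values, and the function names explicit_lookup and nearest_neighbour are invented for illustration. A one-nearest-neighbour classifier stands in here for machine learning in general; it is not a description of how any Army or Defence system works.

```python
import math

# Hypothetical labelled examples: (slope in degrees, surface roughness 0-1) -> label.
# All values are invented for illustration only.
TRAINING_DATA = [
    ((2.0, 0.1), "traversable"),
    ((5.0, 0.3), "traversable"),
    ((30.0, 0.8), "not traversable"),
    ((25.0, 0.9), "not traversable"),
]

# Traditional approach: a response is explicitly coded for each anticipated input.
EXPLICIT_RULES = {features: label for features, label in TRAINING_DATA}


def explicit_lookup(features):
    """Return the hand-coded response, or None if this exact input was never anticipated."""
    return EXPLICIT_RULES.get(features)


def nearest_neighbour(features):
    """Generalise by returning the label of the most similar previously observed example."""
    _, label = min(TRAINING_DATA, key=lambda item: math.dist(item[0], features))
    return label


new_input = (4.0, 0.2)  # terrain that was never explicitly coded for
print(explicit_lookup(new_input))    # None
print(nearest_neighbour(new_input))  # traversable
```

The sketch also hints at the concern raised by critics: the learned response is only as good as the examples it draws on, so an input unlike anything in the training data may still be generalised confidently but incorrectly.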

Despite the operational flexibility that AI technology could create, many commentators contend that various technical, organisational, institutional and cultural limitations curtail its capacity to revolutionise, or even enhance, current means of warfighting. Among the most prominent causes for scepticism is the tension between militaries’ desire to understand and predict the tools of warfare they command and the difficulty humans face in explaining the chain of ML-generated logic responsible for AI outputs.[29] This tension is further exacerbated by modern AI models whose outputs may vary depending on contextual minutiae or on their own self-adjusted ML algorithms.[30] Training ML algorithms to produce reliable and predictable outputs for military applications depends on access to operational datasets of substantial size. Critics contend that such datasets ‘often do not exist in the military realm’.[31] Further, sharing such data with industry will often be restricted by information security policies.[32]

Similarly, analysis of contemporary military operations suggests that AI technology must be incorporated within the organisational structure if it is to play a significant role in military operations. As demonstrated by the modern conflicts in Ukraine, Libya, Syria and the Caucasus region, warfighting systems that utilise novel technologies are, by themselves, insufficient to change how militaries operate. Rather, these novel systems are most effective when implemented well within the existing cultural and organisational contexts of the militaries that use them.[33] This requires commanders to carefully consider the effects that they need to create in the operational environment, and how AI or autonomy can enhance these effects or create means of producing them.

Resistance towards the concept of an AI-led warfighting revolution is relevant in guiding expectations regarding the possibilities and challenges posed by operational AI. However, it does not diminish the importance of developing warfighting AI applications and governance systems. While commentators may argue that AI is incapable of significantly affecting the conduct and effectiveness of military operations, the basis for such doubts is generally the lack of existing command and organisational structures to accommodate this technology.[34] Addressing these concerns requires a broad and effects-focused understanding, among commentators and military commanders alike, of the human decision points and authorities that must be present within the capability lifecycle to safely and responsibly exploit AI and autonomy. Deep consideration of these human decision points facilitates the development of AI and autonomous systems that align with ADF doctrine and policy. It also provides assurance that commanders and operators will use these systems responsibly, with an understanding of their abilities and limitations.

[11] Australian Army, Robotics and Autonomous Systems Strategy, pp. 13–14; Alex Neads, Theo Farrell and David J Galbreath, ‘Evolving towards Military Innovation: AI and the Australian Army’, Journal of Strategic Studies 47, no. 4 (2023): 13.

[12] Australian Army, Robotics and Autonomous Systems Strategy, pp. 13–14; Neads, Farrell and Galbreath, ‘Evolving towards Military Innovation’, p. 13.

[13] Australian Army, Robotics and Autonomous Systems Strategy, pp. 17–29.

[14] Alex Neads, David J Galbreath and Theo Farrell, From Tools to Teammates: Human-Machine Teaming and the Future of Command and Control in the Australian Army, Australian Army Occasional Paper No. 7 (Canberra: Australian Army Research Centre, 2021).

[15] Australian Army, The Australian Army Contribution to the National Defence Strategy 2024 (Canberra: Australian Army, 2024).

[16] Ibid.

[17] Avi Goldfarb and Jon R Lindsay, ‘Prediction and Judgment: Why Artificial Intelligence Increases the Importance of Humans in War’, International Security 46, no. 3 (2022): 39–40.

[18] ‘Army’s Autonomous Truck Convoy a First’, Department of Defence (website), 9 June 2023, at: https://www.defence.gov.au/news-events/news/2023-06-09/armys-autonomous-truck-convoy-first

[19] Australian Army, Robotics and Autonomous Systems Strategy, pp. 17–29.

[20] David E Johnson, The Importance of Land Warfare: This Kind of War Redux, The Land Warfare Papers No. 117 (Arlington VA: The Institute of Land Warfare, 2018), pp. 2–4, 8.

[21] Amitai Etzioni (with Oren Etzioni), ‘Pros and Cons of Autonomous Weapons Systems’, in Amitai Etzioni, Happiness is the Wrong Metric: A Liberal Communitarian Response to Populism (Cham: Springer, 2018), pp. 253–254.

[22] Australian Army, Robotics and Autonomous Systems Strategy, pp. 7–8.

[23] Australian Government, Integrated Investment Program 2024 (Canberra: Commonwealth of Australia, 2024), p. 92.

[24] Andrew Carr, ‘Owning Time: Tempo in Army’s Contribution to Australian Defence Strategy’, Australian Army Journal 20, no. 1 (2024).

[25] Neads, Galbreath and Farrell, From Tools to Teammates, pp. 10–11.

[26] Rossiter and Layton, Warfare in the Robotics Age, p. 37; Andrew Ilachinski, AI, Robots, and Swarms: Issues, Questions, and Recommended Studies (Center for Naval Analyses, 2017), pp. 232–233.

[27] Bérénice Boutin, ‘State Responsibility in Relation to Military Applications of Artificial Intelligence’, Leiden Journal of International Law 36 (2023): 136; Jonathan Tan Ming En, ‘Non-Deterministic Artificial Intelligence Systems and the Future of the Law on Unilateral Mistakes in Singapore’, Singapore Academy of Law Journal 34, no. 1 (2022): 93–94.

[28] Boutin, ‘State Responsibility in Relation to Military Applications of Artificial Intelligence’, p. 136; Tan Ming En, ‘Non-Deterministic Artificial Intelligence Systems’, pp. 93–94.

[29] Jean-Marc Rickli and Federico Mantellassi, ‘Artificial Intelligence in Warfare: Military Uses of AI and Their International Security Implications’, in The AI Wave in Defence Innovation: Assessing Military Artificial Intelligence Strategies, Capabilities, and Trajectories (Oxford: Routledge, 2023), pp. 16–17.

[30] Tan Ming En, ‘Non-Deterministic Artificial Intelligence Systems’, pp. 92–94.

[31] Rickli and Mantellassi, ‘Artificial Intelligence in Warfare’, pp. 16–17.

[32] Ibid., pp. 16–17.

[33] Borchert, Schütz and Verbovszky, Beware the Hype, pp. 6, 62.

[34] Rickli and Mantellassi, ‘Artificial Intelligence in Warfare’, pp. 12–13.

Directing attention to the effects that commanders aim to generate in the land domain also provides valuable opportunities to consider potential asymmetric advantages and countermeasures against similarly capable adversaries. The development and use of military AI is a current and continuing international focus. Consequently, Australia’s security interests may be at risk in a future conflict should it fail to develop operational AI capabilities, along with the organisational policies and frameworks that enable their effective development and management. The post-Cold War military technological advantage enjoyed by US-aligned nations is waning as contemporary revisionist states develop similarly advanced technologies. In the emerging security environment, Australia faces a military landscape of increased power projection costs and fewer strategic options. Because AI and autonomy are technologies of significant global interest with major potential benefits, Australia’s pursuit of military automation represents a means to secure advantage and protect its strategic interests in a landscape of intensified strategic competition.[35]

Defence’s strategic response to challenges to international stability, and to the accelerated development of edge technological capabilities, should inform Army’s outlook for land domain AI-enabled and autonomous systems. The 2024 National Defence Strategy emphasises that ‘the greatest gains in military effectiveness in the coming decade will be generated by better integrating existing and emerging technologies’.[36] Further, it prioritises the rapid translation of disruptive edge technologies such as AI into ADF capability.[37] In alignment with this broader Defence direction, Army seeks to build and deploy mechanisms that effectively harness AI and autonomy.[38] Army intends for these AI-enabled capabilities to provide Australia’s land forces with asymmetric warfighting advantage, or to offset potential adversary advantages, by contributing to five key capability offset areas that will enhance Army effectiveness. These include maximising soldier performance, improving decision-making, generating mass and scalable effects, protecting the force, and increasing efficiency.[39] These AI-enabled benefits would enhance Army’s ability to achieve its national priorities, such as expanding its deployable strike capability and improving its ability to secure and control strategic land positions.[40]

As with all military capabilities, the effective development and deployment of land domain AI depends on establishing sufficient underlying systems of control and governance. These systems ensure that the technologies are developed and used efficiently and in line with broader Defence priorities. Simultaneously, they ensure accountability, safety and adherence to legal obligations across the technologies’ lifecycles. These processes increase the effectiveness of technologies and capabilities, both on their own and within larger warfighting systems. When governance provides assurance that systems will perform to the expected standard, stakeholders, from end users to Defence and government decision-makers, will be more willing to develop appropriate trust in them.[41] By contrast, a lack of trust in a technology’s ability to operate as anticipated will severely limit end users’ willingness to use it. Decision-makers will also be less willing to approve its acquisition and use, regardless of its value in instances when it works correctly.[42]

[35] Neads, Galbreath and Farrell, From Tools to Teammates, p. 10.

[36] Australian Government, National Defence Strategy 2024 (Canberra: Commonwealth of Australia, 2024), pp. 15, 38, 65.

[37] Ibid., pp. 15, 38, 65.

[38] Australian Army, Robotics and Autonomous Systems Strategy, pp. 7–8.

[39] Ibid., pp. 7–8.

[40] Australian Government, National Defence Strategy 2024, pp. 38–40.

[41] Australian Army, Robotics and Autonomous Systems Strategy, pp. 25–26; Jon Arne Glomsrud and Tita Alissa Bach, The Ecosystem of Trust (EoT): Enabling Effective Deployment of Autonomous Systems through Collaborative and Trusted Ecosystems (Høvik: Group Research and Development, DNV, 2023).

[42] Neads, Galbreath and Farrell, From Tools to Teammates, pp. 39–44.

In the previous section, this article contended that the effective development and use of AI and autonomy necessitates the implementation of AI management and governance schemes. Defence is aware of this need and has gone some way towards implementing appropriate systems at the enterprise level. Among these efforts, Defence has established a Defence-wide AI governance work area, conducted various responsible AI workshops, and initiated development of cross-domain AI-governing policies.[43] These efforts represent an attempt to approach military AI governance from a largely domain-agnostic, whole-of-Defence position. Specifically, they do not substantially differentiate between operational (warfighting) and enterprise (commercial) or domain-specific applications of AI. This is despite substantial differences in use cases, intended effects and outputs, and risk profiles. As will be discussed, this approach to AI governance may be inappropriate when applied to AI used in operations within the land domain.[44]

It is impractical to govern and manage AI intended for operational and non-operational use under the same policy frameworks. The key reason is the disparity between their use cases and risk profiles. For example, common enterprise applications (such as large language models and enterprise data processing) are unlikely to directly cause injury or death should they operate in an unintended way. As such, AI governance in enterprise settings, including outside Defence, generally prioritises the protection of civil liberties and workplace health and safety rather than potential risks to life. By comparison, military operational AI is intended for use in competition and conflict, where the safety and lives of Australian personnel will probably be under direct threat from hostile entities. Should an AI or autonomous system fail in these high-risk situations, the failure is likely to result in unintentional injury or death among friendly forces.[45] These safety concerns also extend to civilian populations and objects in the operational environment, which may be harmed should operational capabilities produce an unintended output.[46]

AI technology and governance intended for operational use by or for the ADF must align with ADF doctrine and relevant land-specific doctrine. While doctrine is not legally binding, failure to align with it risks placing potential technologies and their bespoke governance processes outside well-established military planning, conduct and evaluation frameworks. This situation may compromise the ADF’s ability to integrate these systems into existing warfighting processes and procedures.[47]

Recommendation 1: Given the different risk thresholds applied to enterprise compared to operational uses of AI, Defence should generate distinct governance frameworks for each, or ensure their distinct treatment within a shared governance framework.

The highly variable physical characteristics of terrain in the land domain create unique complexities and uncertainties for the development and use of AI and autonomous systems. Compared to the land domain, the physical features of the air and maritime domains remain relatively homogeneous across different theatres and over time.[48] As such, an AI or autonomous system operating within these domains needs to adapt to only a comparatively small range of physical features. Further, when traversing from one point to another, the operator of an air or maritime platform does not require moment-to-moment consideration of obstacles in the environment.[49] In contrast, land forces must be prepared to operate across the topographic environments of jungles, mountains, deserts, tundra, littorals, swamps, forests and manmade settlements, often with several different topographies located in close proximity to one another.[50] The land domain is uniquely cluttered, with a density of physical obstacles that is not present in the air or on the sea surface. Obstacles such as trees, mountains, buildings and general variations in topographical elevation create particular complexity when navigating and interacting with the environment.[51] This variability necessitates a high degree of adaptation by land forces, as different terrains present different challenges. Similarly, land domain AI must be able to adapt to and function across these highly variable topographies if it is to be used effectively by commanders in Army operations.[52] This places a substantially greater burden on an autonomous system’s AI than on that of an equivalent system operating in the air or sea domain.

The challenges for AI capabilities that are generated by the varied physical complexity of the land domain relate both to hardware (designing the right platforms to accommodate AI and autonomous capability) and to software (training algorithms on datasets representative of the diversity of the land domain). To illustrate this point, consider autonomous systems that interact with their environment, such as by moving or shooting. Their capacity to collect, analyse and make decisions based on information within their environmental context enables them to act with minimal human involvement within their operational decision-making loop. However, a complex physical environment means that there is a greater risk that this decision-making will fail or be subject to miscalculation. This problem extends to how the AI interacts with the autonomous system’s sensors, which are often unsuited to managing the land domain’s environmental complexity. For example, autonomous ground robots currently struggle to visually perceive and interpret ‘negative obstacles’ such as ditches, holes and puddles. In an operational environment, these robots may not treat these ‘negative obstacles’ as obstacles at all, and may instead unintentionally move into them.[53] To overcome such deficiencies, land domain AI systems will require considerable land-application-specific training data and ML systems in order to make accurate and representative generalisations.[54] At present, Army has only a limited capacity and responsibility to generate such representative land-domain-specific datasets.
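The misinterpretation of negative obstacles described above can be illustrated with a deliberately simplified, hypothetical Python sketch. It assumes the system already has ground-height estimates along its path; the height values, the 0.25 m step limit and the function names are all invented. In practice a large part of the difficulty lies in perceiving such features at all (for example, the inside of a ditch may be hidden from a low-mounted sensor), which this sketch does not model. It shows only how a rule that treats obstacles as things rising above the ground will pass straight over a ditch.

```python
# Hypothetical ground-height samples (metres, relative to the robot's current ground level)
# along the planned path, one sample per 0.5 m. All values are invented for illustration.
path_heights = [0.0, 0.02, -0.01, 0.0, -0.6, -0.7, -0.6, 0.0, 0.4, 0.0]

STEP_LIMIT_M = 0.25  # assumed maximum height change the platform can safely cross


def positive_only_obstacles(heights, limit=STEP_LIMIT_M):
    """Naive check: only features rising above the ground count as obstacles."""
    return [i for i, h in enumerate(heights) if h > limit]


def two_sided_obstacles(heights, limit=STEP_LIMIT_M):
    """Also treat drops below ground level (ditches, holes) as obstacles."""
    return [i for i, h in enumerate(heights) if abs(h) > limit]


print(positive_only_obstacles(path_heights))  # [8]: the ditch at samples 4-6 is missed
print(two_sided_obstacles(path_heights))      # [4, 5, 6, 8]
```

A water-filled hole adds a further complication: its surface may appear level with the surrounding ground, so even the two-sided check would miss it unless other sensing or training data indicated its true depth.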

Recommendation 2: Frameworks governing the development and use of land domain AI should specifically consider the impact of the physical environment on AI-enabled systems’ operational functionality.

Recommendation 3: Opportunities to increase the generation of land-domain-specific datasets should be examined, including the identification of service-level ownership and resourcing.

The higher levels of human, and particularly civilian, interaction inherent to land theatres further complicate the operational use of land AI and autonomous systems. The land domain is ‘the domain where humans live, and operating there almost certainly results in human interaction’.[55] An AI-enabled autonomous system intended for deployment in the land domain must therefore be developed and used with consideration of the complexities and risks associated with AI–human interaction.

One such challenge is the increased risk of military operations causing collateral damage to civilians and civilian objects. This risk is higher in the land domain, where civilians are predominantly located, than in the air or at sea, where they are not. The application of international humanitarian law (IHL) is particularly relevant here, as it relates to the requirement for proportionality in the application of military force and to the protection of civilians and civilian objects. The involvement of operational AI in the potentially lethal application of force is a use case that lies beyond the scope of enterprise AI, but is fundamental to military operations. This significant difference in use cases and their consequences requires a governance system distinct from that applied to enterprise applications, in the same manner that specific systems of control apply to the use of force in operational contexts more broadly.

Conducting military operations in a land environment that contains a significant number of civilians necessitates unique considerations of risk. In the sea or air domain, military personnel can have greater confidence that a person within their operating environment is a combatant, particularly if that person is using a military platform. The situation is different for land operations, particularly when armies are dealing with irregular adversaries capable of concealing themselves within the civilian population and operating within civilian-dense areas in order to limit the use of kinetic force against them.[56] In these environments in particular, armies need suitably thorough risk management and governance protocols for any technology, including AI, that can cause or contribute to harmful action. Such protocols help to ensure compliance with the laws of armed conflict and to protect the perceived legitimacy of military operations in the eyes of the international community.

Recommendation 4: Land-domain-specific AI governance frameworks should directly address the complexities entailed in AI operations conducted within civilian-dense environments.

AI applications generally struggle to respond appropriately to unpredictable stimuli that are not adequately represented in training datasets. It is therefore difficult for AI to effectively detect, analyse and respond to human behaviour, which is highly heterogeneous and unpredictable in nature.[57] This applies both to interactions with civilians and enemy combatants in the land environment and to interactions with friendly forces, including AI system operators.

The spectrum of behaviours a human can perform is limited when their interaction with the outside world occurs via a platform that they control, because that interaction is constrained by the capabilities of the platform. For example, a fighter jet is specifically designed to include the features necessary to enable its function of flying and shooting, and its designers give little attention to features that do not assist the performance of this function. As such, a fighter jet can move and shoot unpredictably, but those instances are unpredictable uses of the predictable behaviours of moving and shooting. It is therefore logical to predict that a platform designed to fly and shoot (and do little else) will fly and shoot. In response, an AI capability can be trained to recognise instances of the fighter jet flying and shooting, and to respond accordingly. This characteristic is similarly relevant in the maritime domain.[58]

In comparison, humans (who are fundamentally unpredictable entities with a suite of capabilities not designed specifically for warfighting functions) can exhibit entirely unpredictable behaviours. For example, we can expect an enemy combatant to run and shoot, as capabilities that serve their ‘function’. However, it is also entirely possible for the combatant to throw something, lie down, feign surrender, make any number of culturally specific threatening statements or gestures, or perform any other action of which human faculties are capable. Equally, non-combatants may exhibit unpredictable behaviours, with nuances that may confound an AI’s capacity to detect them, determine intent and respond appropriately. Developing AI systems capable of responding appropriately to the wide variety of potentially unpredictable human behaviours is therefore a uniquely challenging endeavour within the human-centric land domain. An AI operating amongst civilians, for instance, will not have the intuitive ability to distinguish a civilian throwing a ball from a combatant throwing a grenade, or a combatant holding a firearm from a civilian holding a toy firearm. Further, adversaries seeking operational advantage will take every opportunity to disrupt Army’s ability to understand and operate in a deployed environment. There is legitimate concern, therefore, that an AI presented with behavioural ambiguity may produce unintended behaviours or outputs that are inappropriate to the situation. Such outputs risk compromising its intended mission or causing unintended harm to civilians.[59]

Human unpredictability also complicates the involvement of AI in HMT. HMT is not unique to the land domain. However, the land domain presents a unique level of challenge because the individual soldier, rather than the platform, is the smallest unit of force. This informs Army’s intention to ‘equip the operator’ over ‘operating the equipment’.[60] Consequently, there is interest in performing HMT at the lowest level, with relatively junior Army personnel using AI systems at the tactical edge. This creates a potential scenario in which thousands of AI systems must predict and adapt to the continuous, unpredictable and heterogeneous behaviours and decisions of thousands of individual personnel. In this scenario, an individual failure or miscalculation has the potential both to threaten life and to compromise the operation as a whole.[61]

There are methods available to reduce the behavioural unpredictability of Army personnel. For example, training, doctrine and accountability assist in this regard by encouraging specific favourable actions and responses, and discouraging unfavourable actions and responses. Further, the fact that AI can provide instructions and suggestions to its operator may assist in further limiting the unpredictability of individual Army personnel.[62] More broadly, however, as a key input to AI and a determinant of AI outputs, human behaviour must be treated by Army as an inherent part of AI systems. Recognising the personnel-focused operations of the land domain, the RAS Strategy 2.0 reinforces the importance of developing AI and autonomous technology to maximise the performance of the individual. As one example, it suggests that AI systems ‘will seamlessly fuse different sources of data and intelligence to alert, communicate, and suggest courses of action to dismounted soldiers’.[63] Achieving this outcome will be a complex endeavour. It will require Army to govern and assure human interaction with AI in tandem with managing the technology itself. While the possibility of discord between the soldier and their AI can be managed, the heterogeneity of human behaviour means that it cannot be eliminated.

Recommendation 5: Frameworks governing the development and use of land domain AI should specifically consider the impact of human unpredictability on AI-enabled systems’ operational functionality.

Recommendation 6: Army should invest in testing and simulating a broad spectrum of possible human inputs in order to minimise the residual risks of using AI-enabled capabilities on operations.

The urban operational environment exemplifies the most challenging aspects of the land domain. Its physical, human and informational terrains are inherently highly complex. For example, the high density of buildings and other man-made obstacles within an urban theatre significantly limits the sensor range and navigational freedom of land forces operating within it. Further, the physical terrain of urban environments is constructed by populations with highly diverse cultures and levels of development. This results in significant diversity in physical characteristics between different urban environments, which may influence military operations. Equally, the high density of civilians within urbanised areas creates complexities regarding the form and extent of kinetic force available to land forces, and the manner in which ethnic, religious, political and ideological groups within the broader urban population will interact with land forces and with one another.[64]

The urban environment is increasingly the centre of gravity for land warfare, which presents further complications for the use of AI and autonomous systems in a potential future land conflict.[65] Modern trends of population growth and urbanisation have caused urban populations to expand significantly. The Australian Army Contribution to the National Defence Strategy predicts that the global urban population will double between 2024 and 2044.[66] This trend is particularly noticeable in many Oceanic nations in Australia’s near region, where the majority of the population resides in coastal cities.[67] It motivates both conventional and unconventional forces to fight in, and establish control of, urban areas. For unconventional forces, large urban population centres provide proximity to potential support bases, an environment within which to conceal themselves, and a form of ‘normative shield’ that limits the kinetic options of a conventional military opponent concerned with human rights and international law.[68] Conventional state land forces, on the other hand, have an interest in taking or holding the territory of greatest strategic benefit to either side, as well as controlling and protecting relevant populations. The infrastructure, facilities and large populations of urban centres make them particularly advantageous for state forces to control.[69]

The density of these physical and human factors means that land forces within urban environments are forced to interact with, and make decisions related to, the contents of the physical and human terrain more frequently and in less time.[70] This places greater demands on AI and autonomous systems in comparison to operations conducted in the low-density domains of air and sea. By necessitating a greater number of time-sensitive decisions, urban operations expose AI systems to a greater number of instances where they may fail. This risk is particularly relevant when considered alongside the challenges AI faces in analysing and responding to the complex and unpredictable behaviours of humans, who comprise the high-density human terrain of urban environments.
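The point about decision volume can be made with simple arithmetic. Under the simplifying assumption, made here for illustration only, that each decision fails independently with the same small probability p, the chance of at least one failure across n decisions is 1 − (1 − p)^n, which grows quickly as n increases. The function name and the 1-in-1000 per-decision failure rate below are hypothetical and not drawn from any real system.

```python
def prob_at_least_one_failure(p_fail: float, n_decisions: int) -> float:
    """Probability of one or more failures across n independent decisions.

    Assumes (for illustration) that every decision fails independently
    with the same probability p_fail.
    """
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be between 0 and 1")
    return 1.0 - (1.0 - p_fail) ** n_decisions


# Hypothetical 1-in-1000 per-decision failure rate.
for n in (10, 100, 1000):
    print(n, round(prob_at_least_one_failure(0.001, n), 3))
```

With these assumed figures the chance of at least one failure rises from roughly 1 per cent over 10 decisions to roughly 63 per cent over 1000. Correlated failures, such as an AI repeatedly misreading the same kind of obstacle, would change the numbers but not the underlying point that more decisions create more opportunities to fail.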

Electromagnetic interference is a challenge to AI technology in both urban and non-urban environments. In megacities, for example, dense, complex and sprawling urban terrain, tall vertical structures such as skyscrapers and high-rise buildings, and underground structures such as concrete tunnels create significant interference in the electromagnetic spectrum (EMS) environment of land operations. This is because such infrastructure increases the distance and the density of obstacles between communication senders and receivers. Electromagnetic interference also occurs in non-urban environments: mountainous terrain, dense forests and foliage, and cave systems may all physically interfere with EMS communication.[71] It is therefore necessary for the frameworks governing the development and use of land domain AI to account for AI behavioural risk in instances where a system’s connection to its operator or network is environmentally compromised. While increased autonomy offers possible redundancy in the event of a loss of direct control, thorough assurance is required to validate that autonomous AI systems can continuously fulfil command intent and produce responsible behaviours and outputs across the deployment lifecycle.

The consequences of Army AI failures within an urban environment could be significantly damaging to the local population, Army’s strategic objectives and Australia’s global reputation.[72] As of 2023, approximately 57 per cent of the global population was connected to the internet. Of this 57 per cent, approximately 86 per cent possessed internet-capable smartphones,[73] which equates to roughly half of the world’s population (0.57 × 0.86 ≈ 0.49). Consequently, land forces operating in civilian-dense urban environments will struggle to control or limit the rapid dissemination of information by civilians via digital avenues such as social media,[74] including information regarding operational AI system failures. The substantial presence of digitally connected civilians therefore amplifies the reputational consequences if land forces in urban environments cause collateral damage.[75]

Recommendation 7: The frameworks developed to govern the performance of AI and autonomous systems must account for the unique challenges and risks of operating within high-density, high-complexity land environments.

Land forces are particularly capable of acquiring and maintaining persistent control over territories and populations, in a way that forces operating in other domains are not.[76] Therefore, during a hypothetical future large-scale conflict in Australia’s near region, AI-enabled systems may allow broader dispersal of the land force to achieve greater control and presence across a wider operating area. This includes assisting in persistent regional surveillance to support the detection and disruption of an adversary’s movements in, and ability to access or control, a region of interest.[77] Commanders may also expect AI-enabled systems to persist within contested physical territory, at significant distances from an Australian or allied army base, for extended periods.

Questions arise, however, regarding the maintenance of AI and autonomous systems during extended operations within the field. Army’s access to the EMS within an active land conflict zone will inevitably be contested, degraded or denied, both deliberately by adversarial actors and incidentally by complex physical terrain.[78] Land domain AI and autonomous systems must be able to operate within such an unreliable EMS environment. In some cases, deployed AI and autonomous systems in remote locations will be unable to reliably receive regular updates or maintenance from bases of operations over a digital network. Should an AI platform suffer from a critical vulnerability or serious technical problem, the inability to resolve such issues within the field may cause the AI to operate in a manner that compromises operations or causes unintended damage.

Further, ML systems are liable to deteriorate in performance and accuracy over time, a phenomenon commonly known as model degradation. This degradation may be caused by a mismatch between training data and the problem space in which the system operates. A model may also degrade if the operational environment increases in variance and complexity over time; in such a scenario, a single model will be unable to respond accurately to all possible environmental inputs. An ML model that is unaffected by degradation typically receives inputs resembling those it was trained on, and processes them using an algorithm that translates those inputs into desirable outputs. Should the distribution of a model’s inputs, or their relationship to the correct outputs, change over time (a process referred to as concept drift), the model may begin producing incorrect outputs by applying previously valid calculations to data for which they no longer hold. In such instances, ML models may need manual adjustment to remain effective.[79]
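A common response to concept drift is to monitor a deployed model’s performance and flag when it departs from what was observed during testing. The sketch below shows one simple version of that idea; it is not a method taken from the cited sources or from any Defence system. It compares the error rate over a sliding window of recent outputs with a baseline error rate. The class name, window size and tolerance are hypothetical, and the approach assumes that the correctness of each output can eventually be observed, which may not hold in the field.

```python
from collections import deque


class ErrorRateDriftMonitor:
    """Flag possible drift when the recent error rate exceeds a baseline.

    Hypothetical sketch: assumes each output's correctness can eventually be
    observed, and that the baseline error rate was measured during assurance.
    """

    def __init__(self, baseline_error: float, window: int = 50, tolerance: float = 0.10):
        self.baseline_error = baseline_error
        self.tolerance = tolerance              # allowed absolute rise in error rate
        self.recent = deque(maxlen=window)      # 1 = incorrect output, 0 = correct

    def update(self, was_error: bool) -> bool:
        """Record one outcome; return True if drift is suspected."""
        self.recent.append(1 if was_error else 0)
        if len(self.recent) < self.recent.maxlen:
            return False  # too few observations to judge yet
        recent_error = sum(self.recent) / len(self.recent)
        return recent_error > self.baseline_error + self.tolerance


monitor = ErrorRateDriftMonitor(baseline_error=0.05)
# Phase 1: inputs resemble the training data (one error in every 20 outputs).
before = [monitor.update(i % 20 == 0) for i in range(100)]
# Phase 2: the environment changes and every second output is wrong.
after = [monitor.update(j % 2 == 0) for j in range(50)]
print(any(before), after.index(True))
# False 12
```

The monitor stays silent while conditions match the baseline and raises a flag a dozen outputs after the change. Detecting drift does not fix it; the model would still need the re-baselining or manual adjustment described above.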

Without regular and reliable re-baselining and maintenance in the field, ML-enabled land capabilities will be vulnerable to model degradation and concept drift. This risks compromising command and control, and weakening the operational effectiveness and safety of land domain AI systems in remote locations or contested environments. The dynamic nature of the land domain exacerbates this issue: the changes in input that accelerate model degradation are likely to occur more frequently in a domain dense with dynamic human and terrain factors. Further, the comparatively high likelihood of civilian presence near a potentially compromised AI system elevates the risk of deploying AI-enabled capabilities without reliable means of maintenance and updating.[80] One potential method of managing the challenge of autonomous system persistence in the land domain would be to ensure that in-field land AI systems are regularly maintained and updated. However, the degradation and unreliability of EMS communications caused by physical terrain and human actors in the land domain, discussed above, limit the practicality of this solution.[81]

Recommendation 8: Army would benefit from governing land domain AI systems in a manner appropriate to their potential value; this would require consideration of the unique challenges and opportunities in achieving AI persistence within theatre.

Land AI systems will be deployed in highly dynamic operational contexts, where the environments, capabilities and adversaries to which they must respond change over time. The ability of AI systems developed using ML techniques to respond appropriately to operational events in the field will depend on the quality of the datasets on which they were trained. If the AI’s training data does not reflect environmental changes on the battlefield, it will respond to novel inputs with suboptimal outputs.[82] Case studies from corporate use of AI technology have shown that the quality of training data is compromised if it is outdated, biased, insufficient in quantity or poorly labelled. For example, a lack of representation of certain subgroups within AI training data has previously resulted in corporate job recruitment AIs developing biases against female candidates, and facial recognition software failing to accurately recognise members of under-represented racial groups.[83]
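The subgroup problem described above can stay hidden inside a single headline accuracy figure. The sketch below, a hypothetical illustration rather than a prescribed test and evaluation method, disaggregates accuracy by group so that a weakly represented group becomes visible. The function names, group labels, records and the 0.75 threshold are all invented for the example.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def lagging_groups(records, min_accuracy):
    """Return groups whose accuracy falls below min_accuracy, sorted by name."""
    return sorted(g for g, acc in accuracy_by_group(records).items() if acc < min_accuracy)


# Hypothetical evaluation records: (group, predicted label, true label).
records = (
    [("well_represented", 1, 1)] * 9 + [("well_represented", 1, 0)]
    + [("under_represented", 1, 1)] * 3 + [("under_represented", 0, 1)] * 2
)
overall = sum(p == a for _, p, a in records) / len(records)
print(round(overall, 2), accuracy_by_group(records), lagging_groups(records, 0.75))
```

In this toy case the overall accuracy of 0.8 looks acceptable, while the under-represented group is classified correctly only 60 per cent of the time.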

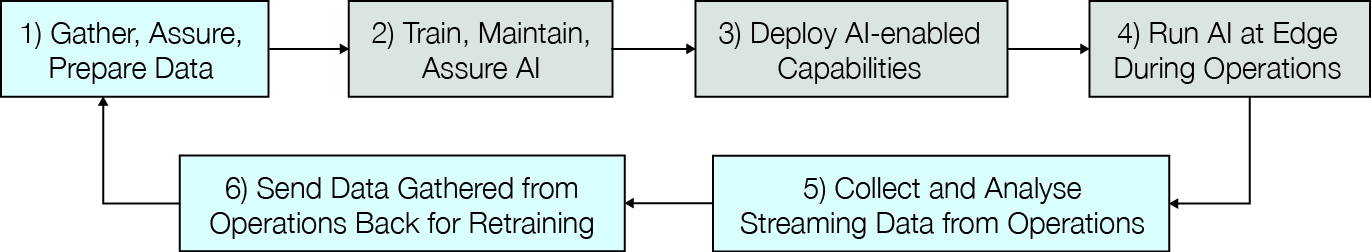

To address changes in operational contexts as they arise, and to avoid the degradation of AI training data, Army needs the ability to continually adapt AI models and training datasets. Closely aligning AI training with likely operational conditions would enhance the operational effectiveness and reliability of AI-enabled capabilities.[84] Figure 1 presents a simplified representation of a suggested cyclical approach to operational AI development, deployment and assurance.

Figure 1: Simplified representation of cyclical approach to operational AI development, deployment and assurance[85]
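The cyclical logic can also be expressed as a simple control loop. The sketch below is an illustration built on stated assumptions, not a reproduction of Figure 1 or of any Defence process: `adaptation_cycle` and the stage functions `train`, `assure` and `collect_field_data` are hypothetical placeholders. It captures two design points: only a candidate model that passes assurance replaces the fielded model, and data gathered from the fielded model feeds the next round of training.

```python
from typing import Any, Callable, List, Optional, Tuple


def adaptation_cycle(
    dataset: List[Any],
    train: Callable[[List[Any]], Any],
    assure: Callable[[Any], bool],
    collect_field_data: Callable[[Any], List[Any]],
    rounds: int,
) -> Tuple[Optional[Any], List[Any], List[Tuple[int, Any, bool]]]:
    """Run repeated develop -> assure -> deploy -> collect rounds (hypothetical)."""
    fielded = None
    log = []
    for round_no in range(rounds):
        candidate = train(dataset)      # develop a new model from current data
        passed = assure(candidate)      # test and evaluation gate
        if passed:
            fielded = candidate         # only assured models are deployed
        log.append((round_no, candidate, passed))
        if fielded is not None:
            # Operational data from the fielded model extends the training set.
            dataset = dataset + collect_field_data(fielded)
    return fielded, dataset, log


# Toy stand-ins: a "model" is just the size of the data it was trained on,
# and the candidate trained on 5 samples is made to fail assurance.
fielded, data, log = adaptation_cycle(
    dataset=[0, 0, 0],
    train=len,
    assure=lambda model: model != 5,
    collect_field_data=lambda model: [model, model],
    rounds=3,
)
print(fielded, len(data), log)
```

In the second round the candidate fails assurance, so the previously assured model stays in use and continues to generate field data. In practice the collection step depends on EMS access that, as discussed above, may be contested or degraded in the land domain.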

While this model would be equally applicable across the sea and air domains, there are several reasons why it is particularly relevant to land operations. For one, the physical characteristics of the land domain, and the relative human density, present a more expansive suite of potential data inputs. This fact, combined with the dynamic nature of the land domain, also creates high potential for rapid changes to context over time. This situation necessitates a significantly greater quantity of training data to ensure ML training represents the likely contexts in which the AI-enabled capability will operate.[86] For example, an autonomous vehicle may navigate an urban environment by collecting environmental data and orienting itself based on the detection of certain landmarks. However, should the physical environment be disrupted, the autonomous system will struggle to navigate using training data that has become inconsistent with the contemporary reality. Adversaries could therefore readily disrupt AI systems using methods as simple as removing street name signs, or as dramatic as launching a series of missile strikes that demolishes buildings and landmarks.

Further, as mentioned previously, humans are capable of exhibiting a broader range of unpredictable behaviours when not bound by the designed functions of crewed platforms, which dominate the air and maritime domains.[87] This unpredictability creates the potential for rapid change in operating procedures and behaviours within the land domain, as there is a larger number of potential behaviours that soldiers can perform when dismounted. Because the land domain relies on the soldier as the smallest warfighting unit, rather than on a smaller number of large and expensive aircraft or vessels, these evolutions can occur at the level of the individual soldier or section. They could take forms as simple as modifying one’s uniform to differentiate it from typical adversary uniforms, or painting a combat vehicle an unconventional colour to avoid recognition by an AI system.[88]

Instances in which AI has been confounded at the individual level have already occurred in non-war contexts. For example, during the 2019 Hong Kong protests, many protesters wore masks in a deliberate effort to confound state facial recognition software. The Hong Kong Government tacitly acknowledged this weakness of its AI through its efforts to ban the wearing of face masks by protesters.[89]

Recommendation 9: Governance frameworks must be sufficiently flexible to enable training and datasets to evolve.

[43] ‘Responsible AI for Defence (Consultation)’, Trusted Autonomous Systems (website), 2024, at: https://tasdcrc.com.au/responsible-ai-for-defence-consultation/; Kate Devitt, Michael Gan, Jason Scholz and Robert S Bolia, A Method for Ethical AI in Defence (Canberra: Defence Science and Technology Group, 2020).

[44] Johnson, The Importance of Land Warfare, pp. 5–6.

[45] Australian Army, Robotics and Autonomous Systems Strategy, pp. 15–16.

[46] National Academies of Sciences, Engineering, and Medicine, Test and Evaluation Challenges in Artificial Intelligence-Enabled Systems for the Department of the Air Force (Washington DC: The National Academies Press, 2023), p. 52; Brian A Haugh, David A Sparrow and David M Tate, The Status of Test, Evaluation, Verification, and Validation (TEV&V) of Autonomous Systems (Alexandria VA: Institute for Defense Analyses, 2018), p. 4.

[47] Defence Doctrine Directorate, ADF-C-0 Australian Military Power (Canberra: Defence Directorate of Communications, Change and Corporate Graphics, 2024), pp. 1–3.

[48] Australian Army, Land Warfare Doctrine 1: The Fundamentals of Land Power (Canberra: Department of Defence, 2017), p. 10.

[49] Rossiter and Layton, Warfare in the Robotics Age, p. 32.

[50] Johnson, The Importance of Land Warfare, pp. 5–6; Australian Army, Land Warfare Doctrine 1, p. 10.

[51] Australian Army Contribution to the National Defence Strategy, p. 24.

[52] Johnson, The Importance of Land Warfare, pp. 5–6.

[53] Rossiter and Layton, Warfare in the Robotics Age, p. 37.

[54] National Academies of Sciences, Engineering, and Medicine, Test and Evaluation Challenges, pp. 37–39.

[55] Johnson, The Importance of Land Warfare, p. 5.

[56] Ibid., pp. 2–4, 8.

[57] Ilachinski, AI, Robots, and Swarms, pp. 232–233; Desta Haileselassie Hagos and Danda B Rawat, ‘Recent Advances in Artificial Intelligence and Tactical Autonomy: Current Status, Challenges, and Perspectives’, Sensors 22, no. 24 (2022), p. 12.

[58] Rossiter and Layton, Warfare in the Robotics Age, p. 32.

[59] Forrest E Morgan, Benjamin Boudreaux, Andrew J Lohn, Mark Ashby, Christian Curriden, Kelly Klima and Derek Grossman, Military Applications of Artificial Intelligence: Ethical Concerns in an Uncertain World (Santa Monica CA: RAND Corporation, 2020), p. 22.

[60] Defence Science and Technology Group, Shaping Defence Science and Technology in the Land Domain 2016–2036 (Canberra: Department of Defence, 2016), p. 6.

[61] Ilachinski, AI, Robots, and Swarms, pp. 232–233; Hagos and Rawat, ‘Recent Advances in Artificial Intelligence and Tactical Autonomy’, p. 12.

[62] Australian Army, Robotics and Autonomous Systems Strategy, p. 9.

[63] Ibid., p. 9.

[64] Patrick van Horne and Jason A Riley, Left of Bang: How the Marine Corps’ Combat Hunter Program Can Save Your Life (Black Irish Entertainment LLC, 2014).

[65] Rick Burr, Army in Motion: Accelerated Warfare Statement (Canberra: Australian Army, 2020).

[66] Australian Army Contribution to the National Defence Strategy, p. 25.

[67] Ibid., p. 25.

[68] Mikael Weissmann, ‘Urban Warfare: Challenges of Military Operations on Tomorrow’s Battlefield’, in Mikael Weissmann and Niklas Nilsson (eds), Advanced Land Warfare: Tactics and Operations (Oxford: Oxford University Press, 2023), pp. 128–129; Johnson, The Importance of Land Warfare, pp. 2–4, 8.

[69] Johnson, The Importance of Land Warfare, pp. 2–4, 8; Gian Gentile, David E Johnson, Lisa Saum-Manning, Raphael S Cohen, Shara Williams, Carrie Lee, Michael Shurkin, Brenna Allen, Sarah Lovell and James L Doty III, Reimagining the Character of Urban Operations for the U.S. Army: How the Past Can Inform the Present and Future (Santa Monica CA: RAND Corporation, 2017), pp. ix–x.

[70] David Betz and Hugo Stanford-Tuck, ‘The City is Neutral: On Urban Warfare in the 21st Century’, Texas National Security Review 2, no. 4 (2019).

[71] Weissmann, ‘Urban Warfare’.

[72] Ibid., p. 145.

[73] Matthew Shanahan and Kalvin Bahia, The State of Mobile Internet Connectivity 2023 (GSMA, 2023), pp. 16–18.

[74] Weissmann, ‘Urban Warfare’.

[75] Ibid., p. 145.

[76] Johnson, The Importance of Land Warfare, pp. 8, 12.

[77] Australian Army Contribution to the National Defence Strategy, pp. 7–8.

[78] Defence Science and Technology Group, Shaping Defence Science and Technology in the Land Domain, p. 10; Weissmann, ‘Urban Warfare’.

[79] Firas Bayram, Bestoun S Ahmed and Andreas Kassler, ‘From Concept Drift to Model Degradation: An Overview on Performance-Aware Drift Detectors’, Knowledge-Based Systems 245, no. 1 (2022), pp. 1–2; Fabian Hinder, Valerie Vaquet, Johannes Brinkrolf and Barbara Hammer, ‘Model-Based Explanations of Concept Drift’, Neurocomputing 555 (2023).

[80] Weissmann, ‘Urban Warfare’, p. 145.

[81] Defence Science and Technology Group, Shaping Defence Science and Technology in the Land Domain, p. 10; Weissmann, ‘Urban Warfare’.

[82] Maaike Verbruggen, ‘No, Not That Verification: Challenges Posed by Testing, Evaluation, Validation and Verification of Artificial Intelligence in Weapon Systems’, in Thomas Reinhold and Niklas Schörnig (eds), Armament, Arms Control and Artificial Intelligence: The Janus-Faced Nature of Machine Learning in the Military Realm (Cham: Springer, 2022), p. 181; National Academies of Sciences, Engineering, and Medicine, Test and Evaluation Challenges, pp. 37–39.

[83] National Academies of Sciences, Engineering, and Medicine, Test and Evaluation Challenges, p. 122; Haugh, Sparrow and Tate, The Status of Test, Evaluation, Verification, and Validation, p. 23.

[84] National Academies of Sciences, Engineering, and Medicine, Test and Evaluation Challenges, p. 52; Haugh, Sparrow and Tate, The Status of Test, Evaluation, Verification, and Validation, p. 4.

[85] National Academies of Sciences, Engineering, and Medicine, Test and Evaluation Challenges, p. 52.

[86] Australian Army, Land Warfare Doctrine 1, p. 10.

[87] Rossiter and Layton, Warfare in the Robotics Age, pp. 32–33, 37.

[88] Goldfarb and Lindsay, ‘Prediction and Judgment’, pp. 39–40.

[89] Yao-Tai Li and Katherine Whitworth, ‘Data as a Weapon: The Evolution of Hong Kong Protesters’ Doxing Strategies’, Social Science Computer Review 41, no. 5 (2023).

The unique challenges entailed in using AI-enabled technology in the land domain require unique responses. There is an inextricable relationship between AI systems and the environments in which they must operate. This necessitates governance processes that incorporate domain-specific considerations and maximise the effectiveness of AI development. That is not to deny the importance of whole-of-Defence AI governance and strategic direction; such guidance provides an important foundation for the development of domain-specific solutions while ensuring their broad alignment with the AI strategic priorities of the integrated force. However, this article contends that Army will benefit from further governance mechanisms that apply Defence-wide direction to the specific characteristics of the land warfare context. Doing so has the potential to considerably improve operational AI outcomes across Army.

As operational AI systems are introduced and proliferate, commanders will be expected to determine the suitability of deploying these systems in various contexts. They will also have to make the final judgement on whether to use these systems, and to take accountability for the consequences of their decisions.[90] Commanders therefore play an important and uniquely challenging role in land domain AI governance, making time-sensitive and high-risk decisions regarding the use of highly technical systems, generally without access to relevant technical subject matter expertise to inform these decisions. In response to this challenge, and based on the findings of this article, Annex A provides a ‘Commander’s Guide to AI Governance within Land Operations’ for those tasked with analysing the operational use of land domain AI systems.

While the ‘Commander’s Guide’ adds contextual depth to commanders’ decision-making processes, more can and should be done to improve land domain AI governance across all stages of the capability lifecycle. This article is intended to help build a foundation for future research on land domain AI and military AI governance. By establishing the need for domain-specific operational AI governance, it informs the deeper analysis that will be needed to develop these new governance systems. Such analysis will need to clarify the features of operational AI that warrant governance processes separate from the existing Defence-wide processes for traditional capabilities. Similarly, further work is needed to fully appreciate the national and international contexts in which AI capabilities will need to operate. The time to start this work is now. As global interest and investment in AI-enabled military applications increase, the relative effectiveness of Australia’s land AI systems, and of their enabling systems of governance, will play a strong role in determining whether Army’s next operational engagement results in victory or defeat. It will also shape how much time, money and human life is saved or lost in the process.

[90] Neads, Galbreath and Farrell, From Tools to Teammates, p. 55.

This guide poses questions that highlight factors that may constrain the effective use of AI-enabled capabilities by Army. The questions are intended to challenge commanders’ assumptions and prompt consideration of the AI’s operational suitability as influenced by the numerous relevant contextual and environmental factors.

Application of this guide is intended to help improve the quality of decisions concerning land AI deployment. The guide also assists commanders to calibrate the level of trust that can be placed in the abilities of operational AI systems.

The guide is intended to apply to all levels of command.

Before deciding to use or deploy a land domain AI system, ensure that you have considered the following questions. If you are unsure of the answer to any of these questions, consult further with a relevant subject matter expert (e.g. capability manager, designer, super user or operational legal adviser).